Preface

Welcome to Hands On Data Structures! Basically, data structures are the cornerstone in computer science as all operations in computer are virtually to manipulate data efficiently.

So, what is a data structure exactly? Wikipedia offers a working definition:

In computer science, a data structure is a data organization, management, and storage format that is usually chosen for efficient access to data.

Suppose you are a librarian and there are more than 10 thousand books. Then how to organize and manage those books is of importance to you, because readers expects they are able to find the book they love efficiently. Nobody wants a mess!

In this book, you will learn to organize/manage those data (e.g., books) by designing efficient data structures. Let's stick to the analogy of being a librarian. As we can see, how to fetch/add/remove books is also an essential part of your daily work. In computer science, it is called algorithms, which describes how to do something step by step in computers. Furthermore, we can obtain such interesting equation1:

\[ Programs = Data\ Structures + Algorithms \]

It is obvious that data structures and algorithms are highly inherently relevant with each other. Without data structures, it is unlikely to design efficient algorithms. To some extent, algorithms can be regarded the application of data structures. However, a thorough introduction to algorithms, mainly including algorithms design and analysis, is out of the scope of this book. As a result, we focus on some basic data structures, and only a handful of parts are dedicated to pure algorithms.

Note that there are many awesome books available about data structures and algorithms, and this book is just a follower of them. Just to name a few:

- Introduction to Algorithms by Thomas H. Cormen, Charles E. Leiserson, Ronald L. Rivest, and Clifford Stein. It is known as CLRS.

- Algorithms by Robert Sedgewick, Kevin Wayne.

- Data Structures & Algorithms in Java by Michael T. Goodrich, Roberto Tamassia, Michael H. Goldwasser.

- Data Structures & Algorithms in Python by Michael T. Goodrich, Roberto Tamassia, Michael H. Goldwasser.

- Data Structures and Algorithm Analysis in Java by Mark A. Weiss.

It is highly recommended reading those masterpieces (especially CLRS and Algorithms) if you want to have an in-depth understanding, for this book is tailored for students in SWUFE by eliminating or simplifying many obscure parts.

The motivation of this book is to help students obtain hands-on skills in terms of data structures by putting emphasis on coding and programming itself. It is also worthwhile to note that data structures are not limited to some specific programming languages. In this book, we will focus on the object-oriented way, and cover the implementations in both Java and Python.

1 Algorithms + Data Structures = Programs is a 1976 book written by Niklaus Wirth covering some of fundamental topics of computer programming, particularly that algorithms and data structures are inherently related.

Fundamentals

In this chapter, we will take a quick glance at data structures.

Introduction

Let's continue to use the analogy of being a librarian, so you have to design an efficient way to manage the large amount of books. Suppose we need only to care about the names of books, we can use an array in Java or a list in Python:

String[] books = {"Gone with the Wind", "Hands on Data Structures"};

books = ["Gone with the Wind", "Hands on Data Structures"]

But, the capabilities of different data structures vary considerably. For example, an array in most programming languages is in a fixed size. To put it another way, you cannot add or remove any item in it, and this feature is ridiculous for a general library management system.

Given the pitfall of an array, can we always prefer to a list? The answer is NO! Because trade-offs are ubiquitous in computer science, different data structures have their own pros and cons, and we should choose an appropriate data structure by considering the specific context. As for an array, it is the best due to its efficiency if we have already known that the size of items won't be changed.

By the way, if we would like the feature of adding/removing in Java, we can use ArrayList in Java alternatively:

List<String> books = new ArrayList<>();

books.add("Gone with the Wind");

books.add("Hands on Data Structures");

Now let's have a look at the term "efficiency", which is the motivation of designing new data structures and algorithms. Generally speaking, we mainly care about the two kinds of efficiency:

- Time: A program that costs less time is considered as a better one.

- Space: A program that costs less memory/disk1 is considered as a better one.

Here is an example about time efficiency. If you are asked to find the book titled "Gone with the Wind", how do you do? You probably would check each item one by one in the list (or array). In the best case when you are lucky enough, the desired book is the first one, so the checking operation is done in only one time. But in the worst case, the desired one is the last, so the checking operation is done in N times, where N is the number of the books. And on average case, this operation is expected to be done in N/2 times. As we can see, this basic data structure can be time-consuming sometimes.

A natural question is: can we find a data structure that is capable of finding a desired book in only one time in all cases? The answer is YES, but please keep in mind that there might be some trade-offs. In other words, a new data structure with better time efficiency may be shipped with other pitfalls. Therefore, it is the programmer's responsibility to choose an appropriate data structure as well as algorithm according to difference contexts. On the other hand, we are always aiming to find good data structures and algorithms which are efficient in most cases practically and theoretically.

Last but not the least, for most programmers, they don't really have to design innovative data structures or algorithms, because most the languages and third packages/libraries have provided a great many on-shelf data structures and algorithms. Then why do we still need to learn this course? There are mainly two-fold reasons:

- Understanding how a data structure and algorithm work behind the scenes is necessary when choosing an appropriate one.

- You should be a creator, not just a user. Sometimes, there is no handy data structures or algorithms available, so you have to design a new one.

1 In this book, we mainly discuss the memory overhead of a data structure or algorithm.

Java Built-in Data Structures

In this section, we will introduce arrays and three essential data structures (often referred as collections) in Java. A complete tutorial can be found here.

A collection — sometimes called a container — is simply an object that groups multiple elements into a single unit. Collections are used to store, retrieve, manipulate, and communicate aggregate data.

Collections (e.g., ArrayList mentioned in the last section) are indispensable ingredients in our daily programming work, and they represent data items that form a natural group.

Array

An array is a low-level data structure in Java, instead of a kind of collection. Generally, if you don't have adequate reasons to choose an array, then a list is always preferred. But sometimes a solid understanding about arrays is necessary if you want to design you own data structures. See more at Arrays.

The method that we use to refer to individual values in an array is numbering and then indexing them. If we have N values, we think of them as being numbered from 0 to N-1.

Creating and initializing an array

Making an array in a Java program involves three distinct steps:

- Declare the array name and type.

- Create the array

- Initialize the array

The long form is to specify those three steps explicitly:

double[] a; // declare

a = new double[N]; // create

for (int i = 0; i < N; i++) {

a[i] = 3.14; // initialize

}

And the declaration and creation can be written in a short form:

double[] a = new double[N];

Furthermore, those three steps can be even shortened into one line:

double[] a = { 3.14, 3.15, 3.16 };

Note that like most programming languages, Java would provide default values even if you do not initialize them, but it is a bad practice to rely on the compiler. So please make an initialization explicitly before using an array.

Accessing an array

We can access an array via indexing:

double d = a[0]; // get the first item

a[0] = 42.0; // update the first item

You should pay attention to the indexing range, because it will raise an OutOfBoundsException if you use the index less than 0 or greater than N - 1.

Iterating an array

You can use the tradition for loop to iterate an array:

for (int i = 0; i < N; i++) {

System.out.println(a[i]);

}

But for modern Java, you should prefer to the enhanced for-each, since it is likely to make the OutOfBoundsException using the traditional method:

for (double d : a) {

System.out.println(d);

}

Generics and arrays

Suppose you have developed a Library class which manages books:

public class Library {

private Book[] books;

public void add(Book book) {

...

}

...

}

Then how can you reuse this class to manage other types, let's say Fruit for a grocery? A naive method is to repeat the code:

public class Grocery {

private Fruit[] fruits;

public void add(Fruit fruit) {

...

}

...

}

There is a golden rule in programming: don't repeat yourself, so we would like to manage either Book or Fruit using one piece of code. Since every class in Java is inherited from Object, what about Object[]? Well, it is feasible, but it is also error-prone, because it cannot prevent users adding both books and fruits to our system at the same time. To solve this problem, you should use generics. Generics have been a part of the language since Java 5, and see more at Generics.

But keep in mind that arrays and generics do not mix well. For example, the following code will result in generic array creation at compile time:

T[] elements = new T[N];

Here I propose one solution to this problem. To manage different types of items, the Manager class accepts generics types (The complete code can be found at Manager.java):

public class Manager<E> {

private E[] elements;

private int size = 0;

private static final int INIT_CAPACITY = 16;

@SuppressWarnings("unchecked")

public Manager() {

elements = (E[]) new Object[INIT_CAPACITY];

}

public void add(E item) {

...

}

...

}

Since casting an Object[] to E[] is unsafe, we use @SuppressWarnings("unchecked")1 to tell the compiler that I know what I am doing, and please don't give me any warning. Don't get frustrated if you don't fully understand what the code above means, and we will go back to discuss the code in detail later. What you need to know now is to realize the importance of generics and possible traps when combining generics and arrays.

By the way, it is very straightforward to make use of the generic class:

Manager<Book> library = new Manager<>();

library.add(new Book("Gone with the wind", "Margaret Mitchell"));

library.display();

Common operations of arrays

As a Java programmer, you should get familiar with java.util.Arrays which offering plenty of handy methods. You can refer to JDK API Doc, and I will introduce some most commonly used methods in the following. Note that all methods are static.

asList(T... a): Returns a fixed-size list backed by the specified array2.

List<String> books = Arrays.asList("Gone with the Wind", "Hands on Data Structures");

fill(int[] a, int val): Assigns the specified int value to each element of the specified array ofints.

int[] arr = new int[100];

Arrays.fill(arr, 42);

copyOf(int[] original, int newLength): Copies the specified array, truncating or padding with zeros (if necessary) so the copy has the specified length.

int[] arr = {1, 2, 3};

// expand its size to 6

arr = Arrays.copyOf(arr, 6);

arr[3] = 4;

sort(int[] a): Sorts the specified array into ascending numerical order.

int[] arr = {1, 9, 4, 2};

Arrays.sort(arr);

// now it becomes {1, 2, 4, 9}

List

Here I mainly talk about java.util.ArrayList, which is a resizable-array implementation of java.util.List. Keep in mind that never use raw types for collections, including List, Set and Map, and make friends with generics!

List<String> books = new ArrayList<>(); // generics

List fruits = new ArrayList(); // don't do this!

Basically, you can regard List as a more powerful array, and you are required to know and practice at least those methods:

add()addAll()clear()contains()get()indexOf()iterator()remove()size()toArray()

And of course, prefer to the enhanced for-each if you need to make an iteration over a list. Some legacy code may use iterator() method manually, but it is no longer preferred today. This suggestion also holds true other collections like Map and List.

Collections

List is a sub-interface of Collection. Like java.util.Arrays, java.util.Collections provides plenty static handy methods. For example,

sort(List<T> list): Sorts the specified list into ascending order, according to the natural ordering of its elements.

List<Integer> list = Arrays.asList(1, 9, 4, 2);

Collections.sort(list);

// now list is [1, 2, 4, 9]

reverse(List<?> list): Reverses the order of the elements in the specified list.

List<Integer> list = Arrays.asList(1, 9, 4, 2);

Collections.reverse(list);

// now list is [2, 4, 9, 1]

max(Collection<? extends T> coll): Returns the maximum element of the given collection, according to the natural ordering of its elements.

List<Integer> list = Arrays.asList(1, 9, 4, 2);

int v = Collections.max(list); // v is 9

Map

Here I mainly talk about java.util.HashMap. Map can be used to represent a mapping between a key and a value, and we assume that keys are unique. For example:

| Key | Value |

|---|---|

| Name | Age |

| ISBN | Price |

| City | Country |

| Country | GDP |

Different from List, a map should manage a collection of pairs. Let's consider a shopping cart which maintains pairs of ISBN (key) and amount (value).

Map<String, Integer> cart = new HashMap<>();

Here we should specify the types of key and value as generics, respectively. To some extent, we can imagine a list as a map whose key is implicitly the index.

To add an item (three books whose ISBN is 7801) into this cart:

cart.put("7801", 3);

To get an item by its key (i.e, ISBN):

int amount = cart.get("7801");

Then what if the key does not exist? This is left as an exercise for readers. Now let's make an iteration over a map.

for (Map.Entry<String, Integer> entry : cart.entrySet()) {

System.out.println(entry.getKey() + " : " + entry.getValue());

}

You are required to know and practice at least those methods:

clear()containsKey()entrySet()get()getOrDefault()isEmpty()keySet()put()remove()size()values()

Set

Here I mainly talk about java.util.HashSet. Unlike a list, items in a set are unique.

Set<String> set = new HashSet<>();

set.add("hello");

set.add("world");

System.out.println(set.size()); // 2

set.add("hello");

System.out.println(set.size()); // 2

Duplicate items are not allowed in a set, so the last statement will still print 2. Again, let's make an iteration:

for (String s : set) {

System.out.println(s);

}

Another key fact is that there is no order defined in a set, which is essentially set used in mathematics. For example, (0, 1, 2) and (1, 0, 2) are equivalent.

Set<Integer> a = new HashSet<>(Arrays.asList(0, 1, 2));

Set<Integer> b = new HashSet<>(Arrays.asList(1, 0, 2));

System.out.println(a.equals(b)); // true

In addition, we can also conclude that indexing in a set is meaningless.

You are required to know and practice at least those methods:

add()clear()contains()isEmpty()remove()size()

1 This line is optional, and it is fine to remove it.

2 Unlike regular lists, the one returned by asList() is a fixed-size one, so we cannot add/remove items on it.

Python Built-in Data Structures

In this section, we will introduce three essential data structures in Python. You can find more in Data Structures from Python's official tutorial and Common Python Data Structures .

In addition, a quick overview about the type hinting in Python will also be covered.

List

Python's list resembles Java's ArrayList, representing a resizable array of items.

num = [1, 9, 4, 2]

Since Python is a weak-type (and dynamic-type) language, you can even put items with different types in a list:

num = ['1', 9, 'four', 2]

But it is not recommended. If you need to store items with mixing types, you can resort to a tuple. Note that tuples are immutable, while lists are mutable.

# t = 'one', 9

t = ('one', 9)

Python is featured by its indexing and slicing. For example,

print(num[0])

print(num[0:2])

print(num[:])

print(num[-1])

print(num[2:-1])

As for the iteration, Python's for is convenient for iterable objects:

for i in a:

print(i)

Sometimes, we would like the index as well as its value while iterating a list:

for i, v in enumerate(a):

print(f"Index of {i} is {v}")

You are required to know and practice at least those methods:

append()extend()insert()remove()pop()clear()sort()reverse()

Another nice feature in Python is the list comprehension, providing a concise way to create lists:

squares = [x**2 for x in range(10)]

By taking the leveraging of Java's Stream API, we can achieve a similar but wordy implementation1:

List<Integer> squares = IntStream.range(0, 10).map(x -> x * 2).boxed().collect(Collectors.toList());

Wow! Python saves a lot of keystrokes.

Note that in Java we use the size() method to get the size of an ArrayList, but the len() method in Python is not defined on a list itself. So you will see the following error if a.len() is used:

Traceback (most recent call last): File "

", line 1, in AttributeError: 'list' object has no attribute 'len'

Instead, you should use len(a) to get the size of list a.

To test whether an item is in a list or not, we can use in (the opposite is not in) operator, while contains() method is used in Java:

num = [1, 9, 4, 2]

print(1 in num) # True

print(3 in num) # False

print(3 not in num) # True

Set

Python's set assembles Java's HashSet, representing an unordered collection of items without duplication. Note that to create an empty set you have to use set(), not {}.

words = {'hello', 'world', 'hello'}

print(words)

print(len(words))

The results will show that duplicates have been removed. By the way, the weak-type of Python makes it also possible to put mixing types in a set. Again, it is not recommended.

The key fact is that there is no order defined in a set, which is essentially set used in mathematics. For example, {0, 1, 2} and {1, 0, 2} are equivalent.

a = {0, 1, 2}

b = {1, 0, 2}

print(a == b) # True

In addition, we can also conclude that indexing in a set is meaningless.

You are required to know and practice at least those methods:

add()clear()discard()pop()remove()union()update()

Like the list, iterations via for, len(), in (not in), and set comprehensions are also supported.

Dictionary

Python's dict assembles Java's HashMap, representing a collection of key-value pairs. Dictionaries can be used to represent a mapping between a key and a value, and we assume that keys are unique. For example:

| Key | Value |

|---|---|

| Name | Age |

| ISBN | Price |

| City | Country |

| Country | GDP |

Different from the list, a dict should manage a collection of pairs. Let's consider a shopping cart which maintains pairs of ISBN (key) and amount (value).

cart = {'7801': 3, '9902': 1}

To add another item into this cart:

cart['8809'] = 1

To remove an item by its key:

del cart['7801']

To get the value via a key:

v = cart['9902']

v = cart.get('9902')

v = cart.get('9902', 0)

To some extent, we can imagine a list as a map whose key is implicitly the index. Readers can explore the differences between indexing syntax and get() to obtain a value.

You are required to know and practice at least those methods:

clear()get()items()keys()pop()update()values()

We can use in (not in) to test whether a key exists, and len() and dict comprehensions are also supported.

print('0011' in cart) # False

print('9902' in cart) # True

The syntax to iterate a dict is slightly different:

for k, v in cart.items():

print(k, v)

Collections

The collections module implements specialized container data types providing alternatives to Python’s general purpose built-in containers (i.e., list, tuple, set, and dict). In the following, I will introduce defaultdict which can supply missing values based on dict.

from collections import defaultdict

cart = defaultdict(int)

print(cart['8899'])

cart['3344'] += 3

print(cart['3344'])

Readers can compare its usage with the regular dict.

Type hinting

Python is featured for its dynamic typing and duck typing.

If it walks like a duck and it quacks like a duck, then it must be a duck.

But sometimes for large projects, the code will become more maintainable if the typing is specified, and the typing module make it possible.

def say_hello(name: str) -> str:

return f'Hello {name}'

This wordy version is more readable by providing the types of the parameter and returned value, and programmers who use static typing languages (e.g., Java) would feel more conformable. The following code is implemented with Java:

public String sayHello(String name) {

return String.format("Hello %s", name);

}

It is worthy to note that the Python runtime does not enforce function and variable type annotations. So it is still okay to pass a non-str value (e.g., an integer) to say_hello():

hi = say_hello(42)

In contrast, your code won't be complied if you use sayHello(42) in Java.

Since Python is a duck typing language, it will check for the presence of a given method or attribute. For example,

def say_length(name: str) -> str:

return f'Hello {len(name)}'

In this case, Python will expect that len() can be applied on the parameter. So say_length(42) will throw a TypeError, while the following code works well:

say_length('data')

say_length([1, 9, 4, 9])

say_length({'S', 'W', 'E', 'N'})

Type hinting is very helpful for large projects, but for simplicity, I will not use this feature for most cases throughout this book.

1 Java 16 brought a shorter toList() method.

Algorithm Analysis

How fast is a program?1 If the (time) efficiency is our major concern, then we have to ask this question regularly.

A hands-on method

A straightforward way is to measure the elapsing time. In the following, we will use a tiny program (Fibonacci.java and fibonacci.py) to compute the nth fibonacci number.

Java

In Java, there are many APIs available in terms of time/date, including System.currentTimeMillis(), Instant.now. You can use either of the following method2 to compute the elapsing time in milliseconds:

long start = System.currentTimeMillis();

// your program runs

long end = System.currentTimeMillis();

long elapse = end - start;

long start = Instant.now().toEpochMilli();

// your program runs

long end = Instant.now().toEpochMilli();

long elapse = end - start;

Instant start = Instant.now();

// your programs runs

Instant end = Instant.now();

long elapse = Duration.between(start, end).toMillis();

A more robust and comprehensive approach in Java is to use JMH, and the usage of it is out of the scope of this book.

Python

In Python, several modules can be used to deal with time/date, including time, datetime. But note that time.time() returns a float number representing the current time in seconds since the Epoch. You can use either of the following code to compute the elapsing time in milliseconds:

start = int(round(time.time() * 1000))

# your program runs

end = int(round(time.time() * 1000))

elapse = end - start

start = datetime.datetime.now()

# your program runs

end = datetime.datetime.now()

elapse = int(round((end - start).total_seconds() * 1000))

Generally, to avoid the variability of the running time, we usually run the program for several times and then take the average. Luckily, the built-in timeit makes it simple.

Visualize the efficiency

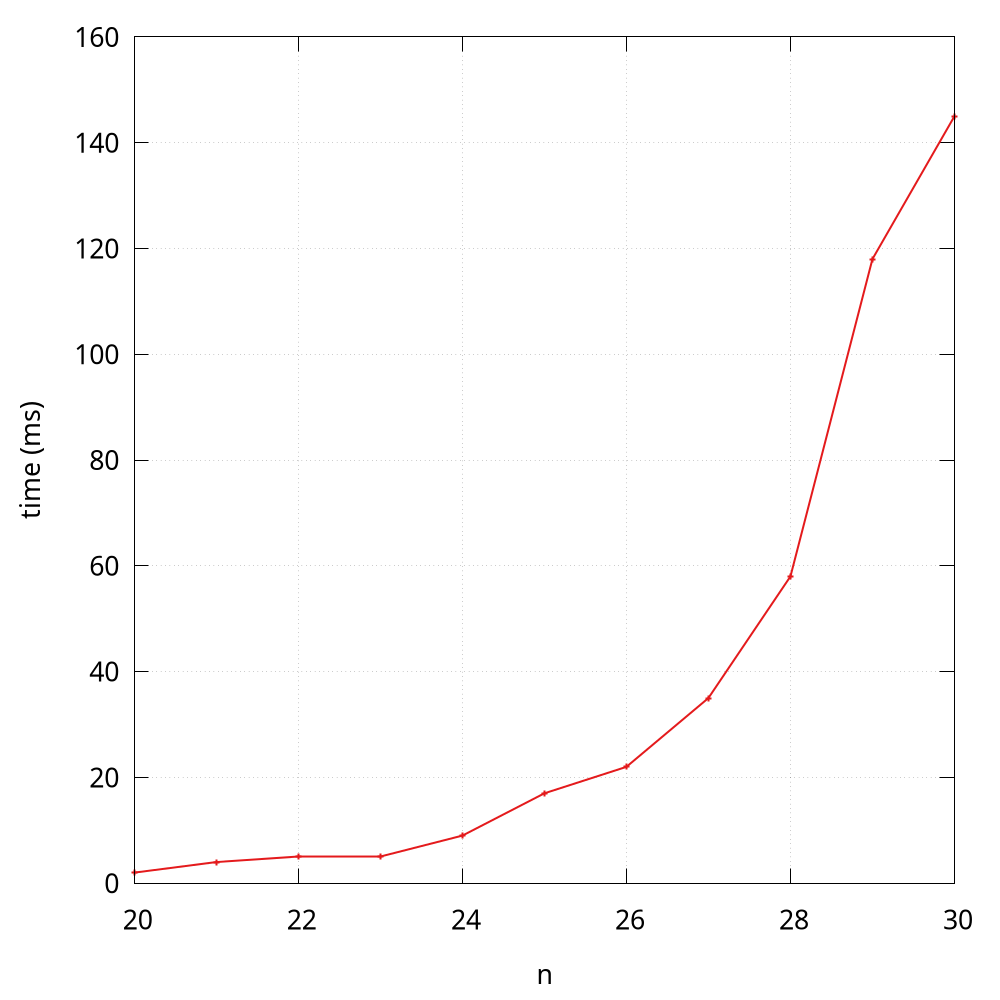

Sometimes, we would like to visualize the quantitative measurements of the running time of our programs. A common qualitative observation about most programs is that there is a problem size

that characterizes the difficulty of the computational task. For example, fibonacci(30) will definitely coast more time than fibonacci(3). Here the parameter (i.e., 3 and 30) can be seen as the problem size.

A simple way is to save (size, time) per line in a file (FibTime.java and fib_time.py), and then to visualize results using tools like gnuplot, ggplot2 in R, Matplotlib in Python, and Plotly Express in Python.

Many people tend to plot the data from the memory directly, but plotting the data from a file is more common in practice. Therefore, we deliberately separate the data computing and plotting in this book.

Here I use the Python version as the example:

if __name__ == '__main__':

ns = [20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30]

with open('fib_python.txt', 'w') as f:

for n in ns:

start = int(round(time.time() * 1000))

fibonacci(n)

end = int(round(time.time() * 1000))

f.write(f'{n} {end - start}\n')

Note that here the problem size starts from 20, because the time is near to 0 when the problem size is less than 20.

According to the observation3, some students may quickly make an insightful hypothesize that the running time is at an exponential growth, and I will analyze it through a mathematical model soon. By the way, the Java version of Fibonacci is much faster than the Python version.

Mathematical models

D.E. Knuth postulated that, despite all the complicating factors in understanding the running times of our programs, it is possible, in principle, to build a mathematical model to describe the running time of any program. Knuth’s basic insight is simple: the total running time of a program is determined by two primary factors:

- The cost of executing each statement

- The frequency of execution of each statement

The former is a property of the computer and the language itself, and the second one is a property of the program and the input. The primary challenge is to determine the frequency of execution of the statements. Here are some examples:

def foo(n):

for i in range(n):

print(i)

The frequency of the inner statement of this function is n.

Sometimes, frequency analysis can lead to complicated and lengthy mathematical expressions. Consider the two-sum problem, and the following is a naive solution:

class Solution:

def two_sum(self, nums, target):

for i, v in enumerate(nums):

for j in range(i + 1, len(nums)):

if v + nums[j] == target:

return [i, j]

return []

The frequency of the inner comparison test statement of this function is (n-1) + (n-2) + ... + 1 in the worst case:

\[ \frac{n \times (n-1)}{2} = \frac{n^2 - n}{2} \]

Curious readers can try to implement a faster algorithm for this problem4. Note that here I put emphasis on the worst case, because if you are lucky enough, the sum of the first and second item equals target, then the frequency in the best case is only 1.

As for the notation \(\frac{n^2 - n}{2}\), we can know that it is the \(\frac{n^2}{2}\) that plays a major rule in terms of the growth, so we can say \(\frac{n^2}{2}\) approximates to \(\frac{n^2 - n}{2}\). In addition, when it comes to the order of growth, the constant here (i.e., 1/2) is also insignificant. The following shows some typical approximations:

| Function | Approximation | Order of growth |

|---|---|---|

| \(N^3/6 - N^2/2 + N/3\) | \(N^3/6\) | \(N^3\) |

| \(N^2/2 - N/2\) | \(N^2/2\) | \(N^2\) |

| \(lg{N} + 1\) | \(lg{N}\) | \(lg{N}\) |

| 3 | 3 | 1 |

The order of growth in the worst case can be also described by the big O notation, e.g., \(O(n^3)\). The following shows some commonly encountered time complexity using the big O notation:

| Description | Time complexity |

|---|---|

| constant | \(O(1)\) |

| logarithmic | \(O(log{N})\) |

| linear | \(O(N)\) |

| linearithmic | \(O(N\log{N})\) |

| quadratic | \(O(N^2)\) |

| cubic | \(O(N^3)\) |

| exponential | \(O(2^N\)) |

For example, we can say

- the complexity of

foo()is \(O(N)\) - the complexity of

two_sum()is \(O(N^2)\)

Case study: Fibonacci

Based on the observation, we make an insightful hypothesize that the running time of recursive implementation fibonacci is at an exponential growth.

According to the code, we know that in order to computer fibonacci(n), it is required to compute fibonacci(n - 1) and fibonacci(n - 2). If we use \( T(n) \) to denote the time used to compute fibonacci(n), then we have:

\[T(n) = T(n-1) + T(n-2) + O(1)\]

The \(O(1)\) here means the addition operation. You can prove that \(T(n) = O(2^n)\)5, and the visualization plotting also validates this theoretical result.

1 Another important question is "Why does my program run out of memory?", which cares about the space efficiency.

2 See more at https://stackoverflow.com/questions/58705657/.

3 This figure is drawn by gnuplot and the code can be found at lines.gp.

4 It is possible to design faster algorithms with \( O(N\log{N}) \) and even with \( O(N) \).

5 See more at https://www.geeksforgeeks.org/time-complexity-recursive-fibonacci-program/.

Test Driven Development

Correctness in our programs is the extent to which our code does what we intend it to do. So, how can we guarantee that our code is correct?

Let's consider that Fibonacci sequence program again. Most beginners would adopt the traditional print-check method manually.

print(fibonacci(0)) # expect: 0

print(fibonacci(1)) # expect: 1

print(fibonacci(2)) # expect: 1

print(fibonacci(3)) # expect: 2

print(fibonacci(4)) # expect: 3

print(fibonacci(5)) # expect: 5

...

But this method is somewhat inefficient, let alone being automatic. In this section, I will introduce a better approach.

Assert

An assertion allows testing the correctness of any assumptions that have been made in the program, and once an assert fails, the program will crash. So, assertions can be used for testing. See more at Assertions in Java and Python's assert.

Java

Fibonacci f = new Fibonacci();

assert f.fibonacci(5) == 5;

And you can even provide additional message for an assertion:

assert f.fibonacci(5) == 5 : "Error when n is 5";

Note that you need to add -ea option to enable assertions. In Intellij IDEA, it can be set in Run > Edit Configurations... > Configuration > VM options.

Python

assert fibonacci(5) == 5

And you can even provide additional message for an assertion:

assert fibonacci(5) == 5, 'Error when n is 5'

Unit tests

Testing is a complex skill: we can’t cover every detail about how to write good tests.

For modern software engineering, the test driven development (TDD) is widely adopted, and unit tests serve as an essential component in TDD. To put it simply, the key points of TDD include:

- Write tests before real implementations. It may sound ridiculous, but it is feasible as long as you can determine the input and expected output of a program. As for the Fibonacci sequence, we can expect that

fibonacci(5)equals 5 before implementing the code. - Write tests for every public API. Here the every implies unit; in other words, we shall make sure every API works as we expect.

- Isolate tests from core code. The naive assertions1 are often messed with the core code, but unit tests are placed separately. This feature is very productive for teamwork, as developers and testers are able to cooperate with each other smoothly.

Due to the importance of unit tests, I will use unit tests when designing data structures throughout this book from now on.

Java

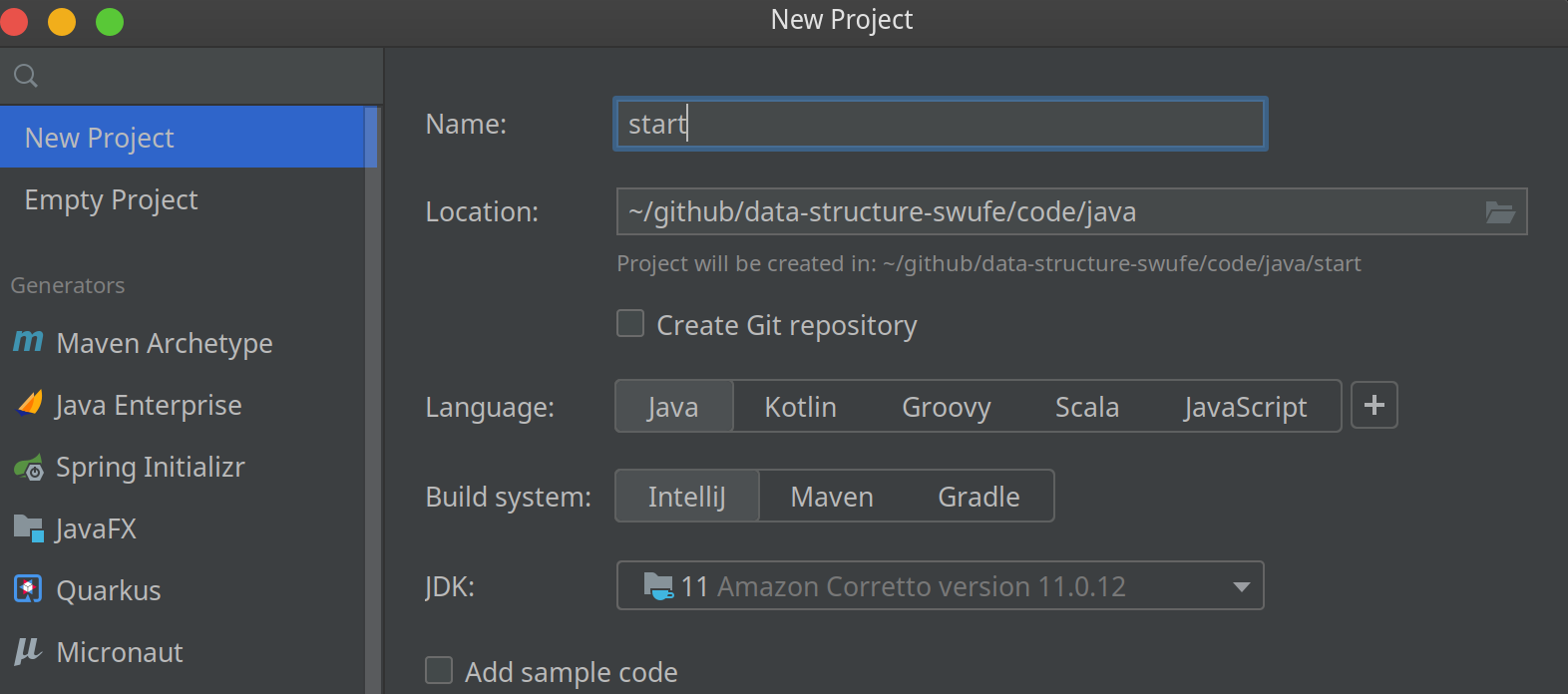

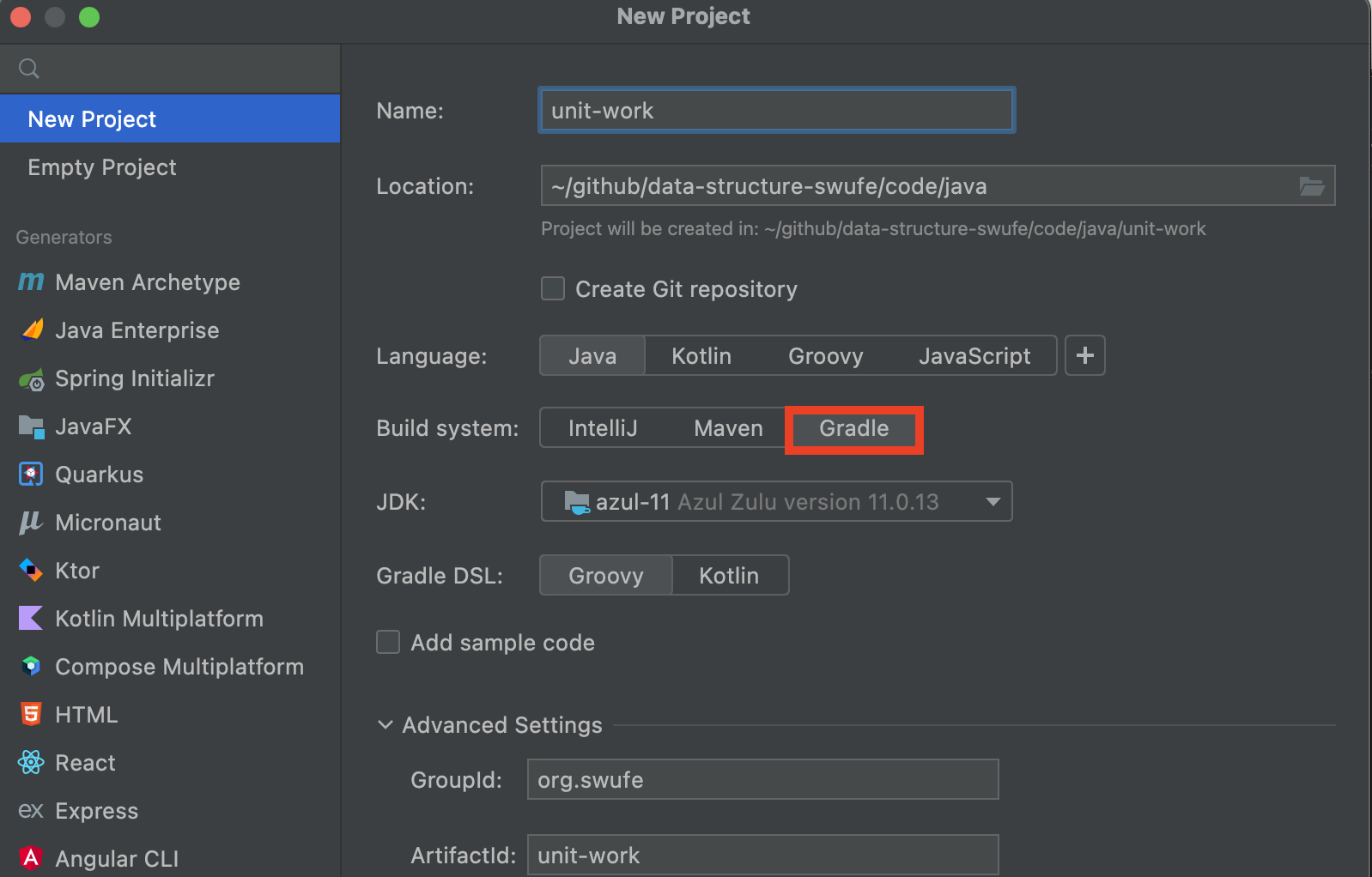

There are several awesome unit testing frameworks for Java, and the most popular one is JUnit 5. In order to integrate this third library in our project, we are going to use gradle as the build system to facilitate our work. Please refer to Appendix for more information.

In Add.java, we have provided a simple method for two numbers' addition:

public class Add {

public int add(int a, int b) {

return a + b;

}

}

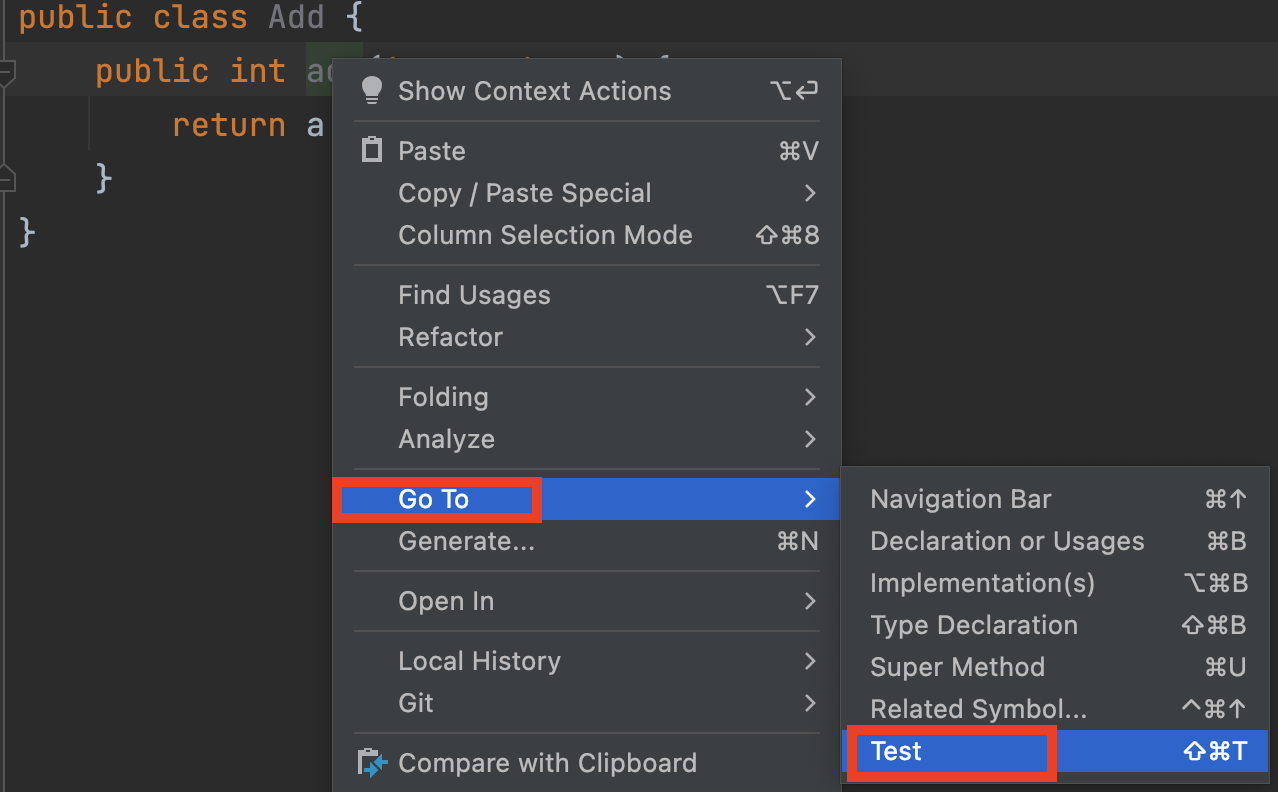

Now let's create a unit test for add(). Please move the cursor onto add(), and then right-click Go to | Test | Create New Test.

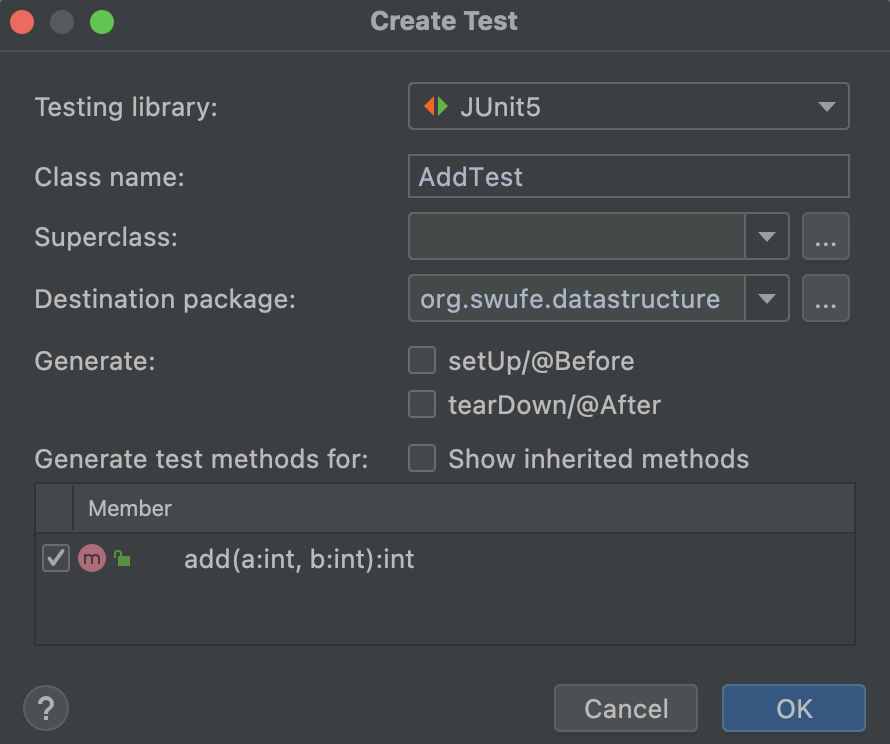

Check the add() method:

It will create an AddTest.java under the test folder for yor:

@Test

void add() {

Add a = new Add();

assertEquals(a.add(1, 2), 3);

}

We can add as many test cases as we like:

@Test

void addTwoNegatives() {

Add a = new Add();

assertEquals(a.add(-2, -2), -4);

}

A test case can be either passed or failed. If all test cases are passed, we can somewhat ensure that this program works as we expected2. Otherwise, it indicates that there are some bugs in our code, and we should fix them before moving on. The comprehensive usage of JUnit 5 is out of the scope of this book, readers can refer to A Guide to JUnit 5 for a quick start.

By the way, all assertions are enabled when executing the unit tests.

Python

Luckily, the unittest module is built with Python, so we do not have to rely on any third-party library3.

As for add.py, we can also follow the steps: move the cursor onto add(), and then right-click Go to | Test | Create New Test. Finally, it will create test_add.py for us, and we can add as many test cases as we like:

from unittest import TestCase

import unittest

from add import add

class Test(TestCase):

def test_add(self):

self.assertEqual(add(2, 1), 3)

def test_add_negatives(self):

self.assertEqual(add(-2, -2), -4)

if __name__ == '__main__':

unittest.main()

1 Part of the reason stems from the fact that assertions have multiple applications. In addition to tests, assertions also can be used to debug code.

2 Edsger W. Dijkstra said that “Program testing can be a very effective way to show the presence of bugs, but it is hopelessly inadequate for showing their absence.” That doesn’t mean we shouldn’t try to test as much as we can!

3 A third-party test library, called pytest, is more powerful than the unittest module in the standard library.

Exercise

- In CoffeeDB.java, we maintain a collection of coffees in an

ArrayList, and this class offers a simple search method:

public List<Coffee> findByName(String name) {

...

}

Your task is to complete its Python version below in coffee_db.py:

def find_by_name(self, name):

pass

HashMapanddict.

- What would you get if the key does not exist for

HashMapin Java? - What is the difference between indexing syntax and

get()to obtain a value fordictin Python?

- Please add a method to delete books by the names from a shop cart in ShopCart.java or shop_cart.py. (Hint: it does not make sense to store an item when its amount is less or equal 0.)

- Please write unit tests for naive_two_sum.py. (Hint: you can refer to the Java implementation NaiveTwoSumTest.java.)

- Please give a big O characterization in terms of

nfor each function shown in example.py.

- Perform experimental analysis to test the hypothesis that Java's

sort()or Python'ssorted()method runs in \(O(n\log{n})\) on average.

// a is a random list

List<Integer> a = Arrays.asList(1, 9, 4, 6);

Collections.sort(a);

# a is a random list

a = [1, 9, 6, 4]

a = sorted(a)

- Please give a big O characterization in terms of

nfor BinarySearch.java or binary_search.py.

- Please design experiments to compare the efficiency between fast_two_sum.py and naive_two_sum.py.

Stacks and Queues

Stacks and queues are fundamental data structures, and they are used in many applications.

By the way, as for the specific implementation, I use Python first, and then Java from now on.

Stacks

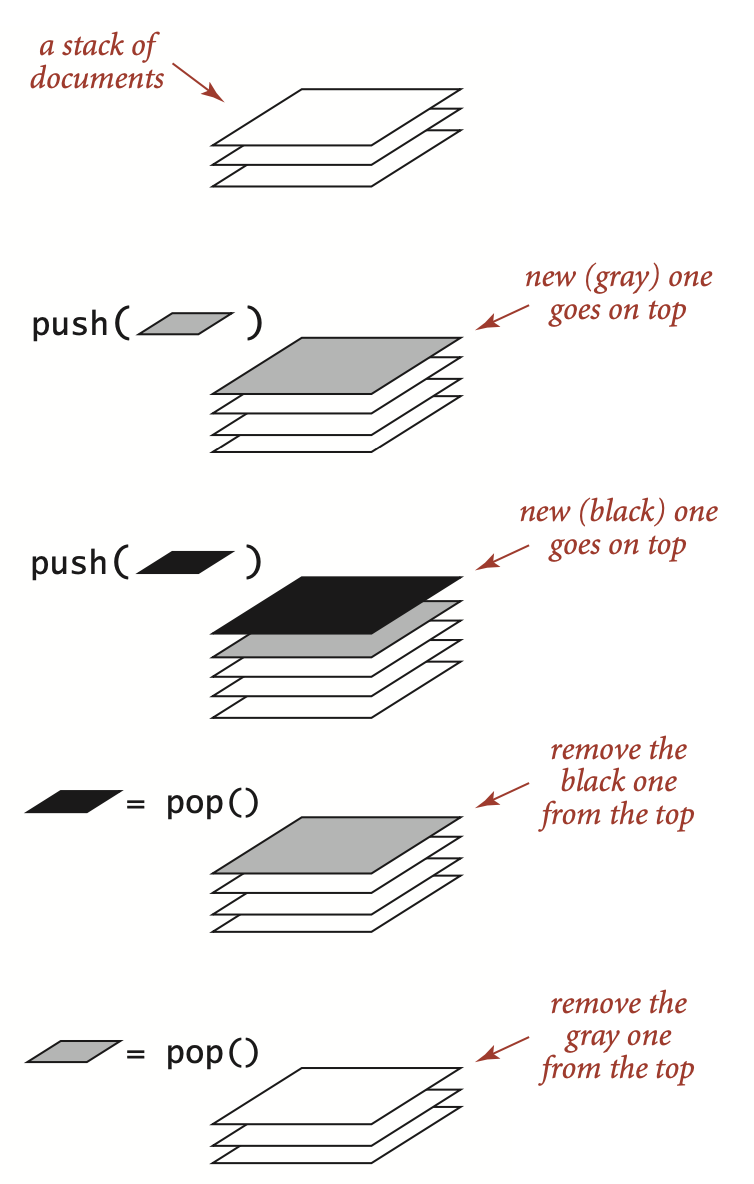

A stack is a collection of objects that are inserted and removed according to the last-in, first-out (LIFO) policy. It supports two basic operations:

push(): add a new itempop(): remove an item

It also supports two more operations sometimes: size() and isEmpty(), returning number of items in the stack, and answering is the stack empty?, respectively.

Stack: a pile of things arranged one on top of another (from Cambridge dictionary).

When you hear of stacks, you shall image a stack of documents (or plates) as illustrated in the following figure1:

The following table shows a series of stack operations and their effects on an initially empty stack S of integers:

| Operation | Stack contents |

|---|---|

| S.push(5) | [5] |

| S.push(3) | [5, 3] |

| S.pop() | [5] |

| S.pop() | [] |

| S.push(7) | [7] |

| S.push[9] | [7, 9] |

| S.push(6) | [7, 9, 6] |

| S.pop() | [7, 9] |

The importance of stacks in computing is profound. For example, the operating system would maintain a stack for variables and states when executing programs, but the detailed discussion of this topic is out of the scope of this book. Here let's consider a common example of a stack when surfing the web.

<!-- a.html -->

<a href="b.html">Next</a>

<!-- b.html -->

<a href="c.html">Next</a>

<!-- c.html -->

<a href="d.html">Next</a>

When you click a hyperlink, your browser displays the new page (and pushes on a stack). You can keep clicking on hyperlinks to visit new pages, but you can always revisit the previous page by clicking the back button (popping it from the stack). The LIFO policy offered by a stack provides just the behavior that you expect.

| Action | Contents in the stack | Where you are |

|---|---|---|

| (Start from a.html) | [] | a.html |

Click Next | [a.html] | b.html |

Click Next | [a.html, b.html] | c.html |

Click Next | [a.html, b.html, c.html] | d.html |

Click Back | [a.html, b.html] | c.html |

Click Back | [a.html] | b.html |

1 This figure is from Algorithms, 4th.

Stack In Python

Fortunately, the list in Python supports the operations required by stacks. To be specific,

append()is to add a new item at the end of the list, so it behaves exactly the same aspush().pop(). Aha! The same method name as we expect.

a = []

a.append(5)

a.append(3)

v = a.pop() # 3

v = a.pop() # 5

a.append(7)

a.append(9)

a.append(6)

v = a.pop() # 6

But, we cannot get its size and check the emptiness of a list in an object-oriented way. To this end, a better way is to encapsulate the data and related operations in a class. The following is the skeleton of Stack, and the complete code can be found at stack.py. Because the list is an array, we call the following implementation array-based stack.

class Stack:

"""A last-in, first-out (LIFO) data structure."""

def __init__(self):

self._data = []

def push(self, item):

pass

def pop(self):

pass

def size(self):

pass

def is_empty(self):

pass

Python does not support the private variables. However, there is a convention that is followed by most Python code: a name prefixed with an underscore (e.g. _spam) should be treated as a non-public part of the API.

As we can see, Stack wraps a list inside, and the implementations of these APIs are generally straightforward as long as you know the basic usage of a list.

push()is based onappend():

def push(self, item):

self._data.append(item)

size()is based on built-inlen():

def size(self):

return len(self._data)

is_empty()is to check whether its size equals 0:

def is_empty(self):

return self.size() == 0

pop()can use list'spop()directly:

def pop(self):

return self._data.pop()

However, if the underlying data is empty, then pop() will throw an exception: IndexError: pop from empty list. We can further customize the error massage to display something like Error: pop from empty stack:

def pop(self):

if self.is_empty():

raise IndexError('Pop from empty stack')

return self._data.pop()

Another common approach is to return a None when it is empty, but it would be troublesome if the item itself is a None:

def pop(self):

if self.is_empty():

return None

return self._data.pop()

A third approach is to use a self-defined exception class, and we will adopt this implementation in this book.

class NoElement(Exception):

"""Error attempting to access an element from an empty collection."""

pass

def pop(self):

if self.is_empty():

raise NoElement('Pop from empty stack!')

return self._data.pop()

Iterator

By now you have probably noticed that most container objects can be looped over using a for statement:

a = [1, 9, 4, 9]

for i in a:

print(i)

Why can we use for to iterate the list? The iterator behind the scenes plays the magic1! See more at Iterators.

It is often a good practice to prepare a single class as the iterator which offers the __next__() method:

class ReverseListIterator:

def __init__(self, data):

self._data = data

self._i = len(data)

def __next__(self):

if self._i == 0:

raise StopIteration

self._i -= 1

return self._data[self._i]

And Stack's __iter__() method returns an instance of the iterator:

def __iter__(self):

return ReverseListIterator(self._data)

We can also use the built-in reversed() iterator:

def __iter__(self):

return reversed(self._data)

In this way, we can iterate the stack as we do for other collections:

s = Stack()

s.push(1)

s.push(2)

s.push(3)

for i in s:

print(i)

Time complexity

The following table summarizes the array-based stack's time complexity:

| Operation | Running time |

|---|---|

| push() | \(O(1)\) |

| pop() | \(O(1)\) |

| is_empty() | \(O(1)\) |

| size() | \(O(1)\) |

As we can see, all APIs are with a constant time complexity.

Note that the time complexity of push() and pop() is amortized. Roughly speaking, it means \(O(1)\) happens most of time, but it may have a larger time complexity only once a while2; and on average, the worst complexity is still \(O(1)\).

Application (1): matching parentheses

In this subsection, we explore an application of stacks by considering matching parentheses in arithmetic expressions. Here we assume that only parentheses (), braces {}, and brackets [] are allowed. This application is also found at LeetCode.

Clearly, each opening symbol must match its corresponding closing symbol. For example:

- Correct:

()(()){([()])} - Incorrect:

({[])}

To what follows, we are going to design an algorithm to check whether an expression is matched in terms of parentheses:

def is_matched(expr):

"""

Check whether an expression is matched in terms of parentheses

:param expr: an expression with parentheses

:return: True if matched; False otherwise

"""

pass

The idea of this algorithm can be described in plain English:

- Scan the expression for left to right.

- If the character belongs to openings (i.e.,

([{), it is pushed on a stack. - If the character belongs to the closings (i.e.,

)]}), an item popped from the stack will be checked whether it is matched with the character or not. If so, continue to scan; otherwise, returnFalse. - After scanning, if the stack is empty, return

True; otherwise, returnFalse.

The steps above can be translated into the code:

def is_matched(expr):

openings = '([{'

closings = ')]}'

s = Stack()

for c in expr:

if c in openings:

s.push(c)

elif c in closings:

if s.is_empty():

return False

if closings.index(c) != openings.index(s.pop()):

return False

return s.is_empty()

Application (2): arithmetic expression evaluation

As another example3 of a stack, let's consider the basic arithmetic expression evaluation. For example,

(1 + ((2 + 3) * (4 * 5)))

If you multiply 4 by 5, add 3 to 2, multiply the result, and then add 1, you get the value 101. How to this calculation using Python?

For simplicity, we begin with the following explicit recursive definition: an arithmetic expression is either a number, or a left parenthesis (i.e., () followed by an arithmetic expression followed by an operator followed by another arithmetic expression followed by a right parenthesis (i.e., )). In other words, we rely on parentheses instead of precedence rules.

For specificity, we support the familiar binary operators *, +, -, and /. A remarkably simple algorithm that was developed by E. W. Dijkstra in the 1960s uses two stacks (one for operands and one for operators) to do this job. Again, the idea of this algorithm can be described in plain English when scanning the expression according to four possible cases:

- Push operands onto the operand stack.

- Push operators onto the operator stack.

- Ignore left parentheses.

- On encountering a right parenthesis, pop an operator, pop the operands, and push onto the operand stack the result of applying the operator to those operands.

After the final right parenthesis has been processed, there is one value on the stack, which is the value of the expression. For example, the algorithm computes the same value for all of these expressions:

( 1 + ( ( 2 + 3 ) * ( 4 * 5 ) ) )

( 1 + ( 5 * ( 4 * 5 ) ) )

( 1 + ( 5 * 20 ) )

( 1 + 100 )

101

For simplicity, the code assume that the expression is fully parenthesized, with numbers and characters separated by whitespace:

def compute(expr: str) -> float:

"""

Dijkstra's two-stack algorithm for expression evaluation.

:param expr: an arithmetic expression

:return: computed value

"""

ops = Stack()

vals = Stack()

expr = expr.split(' ')

for c in expr:

if c == '(':

continue

elif c in '+-*/':

ops.push(c)

elif c == ')':

v = vals.pop()

op = ops.pop()

if op == '+':

v = vals.pop() + v

elif op == '-':

v = vals.pop() - v

elif op == '*':

v = vals.pop() * v

elif op == '/':

v = vals.pop() / v

vals.push(v)

else:

vals.push(float(c))

return vals.pop()

1 It will call __getitem__() instead if __iter__() is not available.

2 It is because of the resizing of an array.

3 This example comes from Algorithms, 4th.

Stack In Java

Java has a built-in library called

java.util.Stack, but you should avoid using it when you want a stack. It has several additional operations that are not normally associated with a stack, e.g., getting the ith element.

Stack based on ArrayList

Our first stacks are based on ArrayList, and the overall implementation is very similar to the one based on the list in Python. An upfront note is that generics are preferred for collections in Java, so the class is declared like:

public class ArrayListStack<Item> {

private List<Item> elments;

public ArrayListStack() {

elments = new ArrayList<>();

}

...

}

Some students may still get confused about the motivation of adopting the generics. It is very good feature in static languages (e.g., Java), because it will enforce types checking in the compiling stage. To put it in another way, it can forbid us mixing apples with oranges. In contrast, Python lacks such a useful feature, so the error-prone code is valid in Python:

s = Stack()

s.push(1)

s.push('apple')

Now let's go back to the Java's implementation. As we can see, ArrayListStack wraps an array list inside, and the implementations of these APIs are generally straightforward as long as you know the basic usage of an ArrayList.

push()is based onadd():

public void push(Item item) {

elements.add(item);

}

size()andisEmpty()have the methods with the same name:

public int size() {

return elements.size();

}

public boolean isEmpty() {

return elements.isEmpty();

}

pop()is based onremove(), and it will returnnullif the stack is empty:

public Item pop() {

if (isEmpty()) {

return null;

}

Item v = elements.get(size() - 1);

elements.remove(size() - 1);

return v;

}

Another common approach is to throw an exception if the stack is empty, and we adopt this implementation in this book:

if (isEmpty()) {

throw new NoSuchElementException("Pop from empty stack!");

}

Iterator

Iterators play the magic behind the scenes when we use the enhanced for loop.

In this subsection, we are going to make the following code valid:

ArrayListStack<String> stack = new ArrayListStack<>();

...

for (String s : stack) {

System.out.println(s);

}

To make a class iterable, the first step is to add the phrase implements Iterable to its declaration, matching the interface

public interface Iterable<Item> {

Iterator<Item> iterator();

}

So, the signature of the class becomes

public class ArrayListStack<Item> implements Iterable<Item>

It is often a good practice to prepare a single class to implement Iterator, where at least next() and hasNext() should be implemented1. Also, this concrete iterator is designed as an inner class, so it can access its parent class directly2.

private class ReverseArrayListIterator implements Iterator<Item> {

private int i = elements.size();

@Override

public boolean hasNext() {

return i > 0;

}

@Override

public Item next() {

return elements.get(--i);

}

}

The complete code can be found at ArrayListStack.java.

By the way, Iterable has offered a default forEach() API since 1.8, so the print code can be shortened to

stack.forEach(System.out::println);

Application: matching parentheses & arithmetic expression evaluation

Readers can try to implement the client code using ArrayListStack for these two applications, and this is left as an exercise.

Stack based on array

In Stack in Python, we summarized the time complexity of the operations of a stack:

| Operation | Running time |

|---|---|

| push() | \(O(1)\) |

| pop() | \(O(1)\) |

| is_empty() | \(O(1)\) |

| size() | \(O(1)\) |

We also pointed out that as for push() and pop(), the worst time is is amortized because it may have a bad time cost once a while. Now let's explore what happens exactly.

First, both ArrayList in Java and list in Python are based on arrays whose size are fixed. So how can we push() a new item onto it when it is full? The solution is to resize the array and copy the items to a new array3. To understand this, we shall introduce two different concepts:

- size: how many items are there in the collection?

- capacity: how many items can this collection hold at most without resizing?

List<String> list = new ArrayList<>();

The size of list is 0, and the default capacity is 10. So, even if you do not add any item into this list, it has an array with size of 10 inside. And after adding 10 items, the underlying array will be resized to a larger one in order to hold more data. During the resizing, a copying operation is required, so it can result in a bad running time once a while.

Therefore, if we would like to use arrays to hold the data for stacks, we shall deal with the resizing manually. The complete code can be found at ArrayStack.java.

private void resize(int capacity) {

assert capacity >= n;

a = Arrays.copyOf(a, capacity);

}

1 In Python, the __next__() can be regarded as the combination of next() and hasNext() here.

2 Python's inner class does support this feature.

3 The resizing operation also happens when the size is much smaller than the capacity.

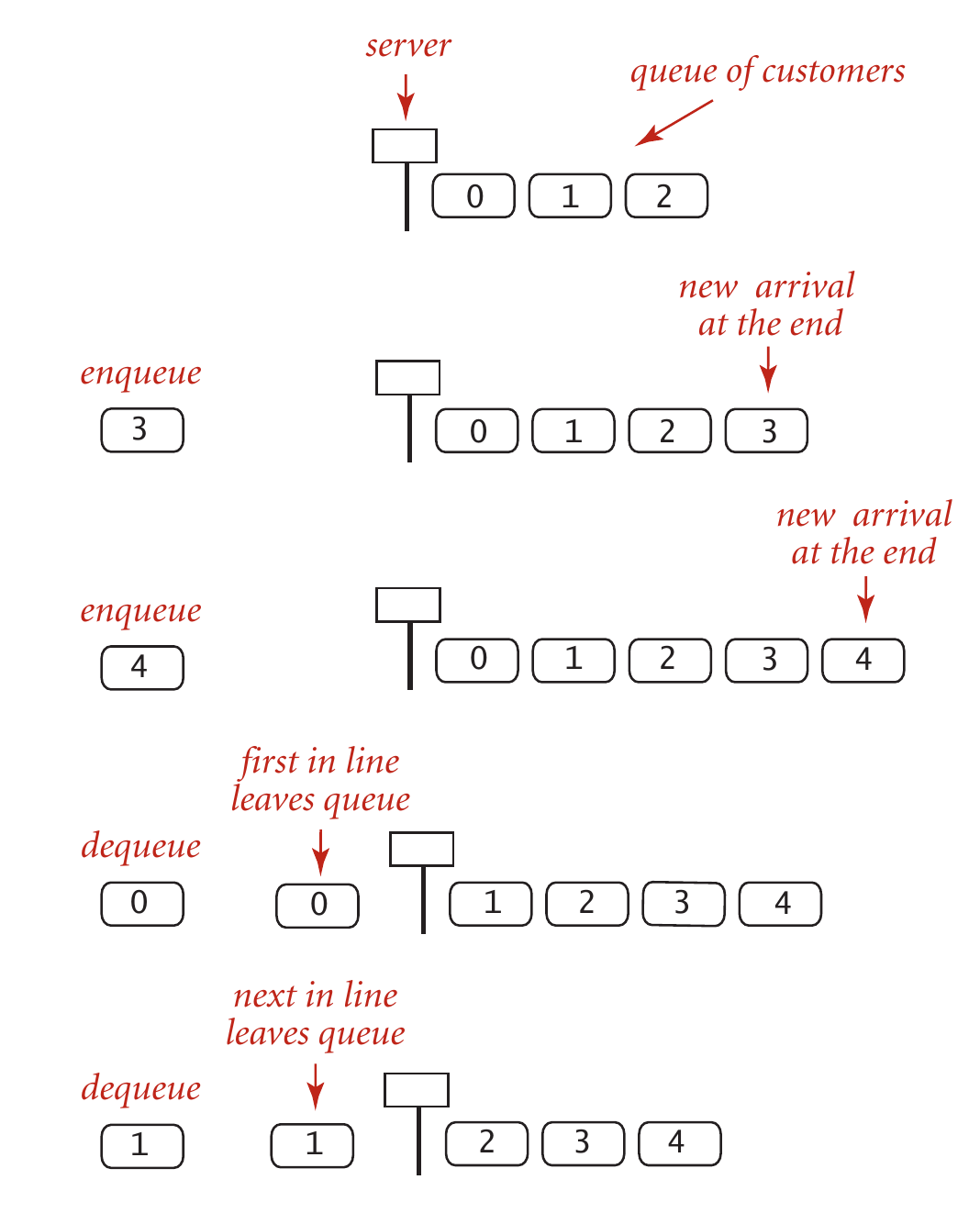

Queues

Different from stacks, a queue is a collection of objects that are inserted and removed according to the first-in, first-out (FIFO) policy. It supports two basic operations:

enqueue(): add a new itemdequeue(): remove an item

It also supports two more operations sometimes: size() and isEmpty(), returning number of items in the queue, and answering is the queue empty?, respectively.

Queue: a line of people, usually standing or in cars, waiting for something (from Cambridge dictionary).

When you hear of queues, you shall image a line of people waiting for the service before a counter as illustrated in the following figure1:

As a running example of queues, let's consider what happens when you are calling 10086. Suppose there is only one telephone operator, and she is free now:

| Action | Contents in the queue | Who is being served |

|---|---|---|

| Bob is calling | [] | Bob |

| Alice is calling | [Alice] | Bob |

| Jack is calling | [Alice, Jack] | Bob |

| Mike is calling | [Alice, Jack, Mike] | Bob |

| Bob ends his calling | [Jack, Mike] | Alice |

| Mary is calling | [Jack, Mike, Mary] | Alice |

| Alice ends her calling | [Mike, Mary] | Jack |

| Jack ends his calling | [Mary] | Mike |

1 This figure is from Algorithms, 4th.

Queues in Python

An implementation of our Queue API based on the list data structure is also straightforward.

class Queue:

"""A first-in, first-out (FIFO) data structure."""

def __init__(self):

self._data = []

def size(self):

return len(self._data)

def is_empty(self):

return len(self._data) == 0

def enqueue(self, item):

self._data.append(item)

def dequeue(self):

if self.is_empty():

raise NoElement('Dequeue from empty queue!')

return self._data.pop(0)

Note that the dequeue() is based on pop() with index 0 as the parameter, meaning popping the first element from the list.

Iterator

The iteration over a queue is like iterating a list, so we can reuse the iterator of the list:

def __iter__(self):

return iter(self._data)

We do not have to implement the __next__ method manually. By taking leveraging of iterators, we can use the following for statement:

q = Queue()

q.enqueue(1)

q.enqueue(9)

q.enqueue(4)

q.enqueue(9)

for i in q:

print(i)

The complete code can be found at queue.py.

Time complexity

The following table summerizes the queue's time complexity:

| Operation | Running time |

|---|---|

| enqueue() | \(O(1)\) |

| dequeue() | \(O(N)\) |

| is_empty() | \(O(1)\) |

| size() | \(O(1)\) |

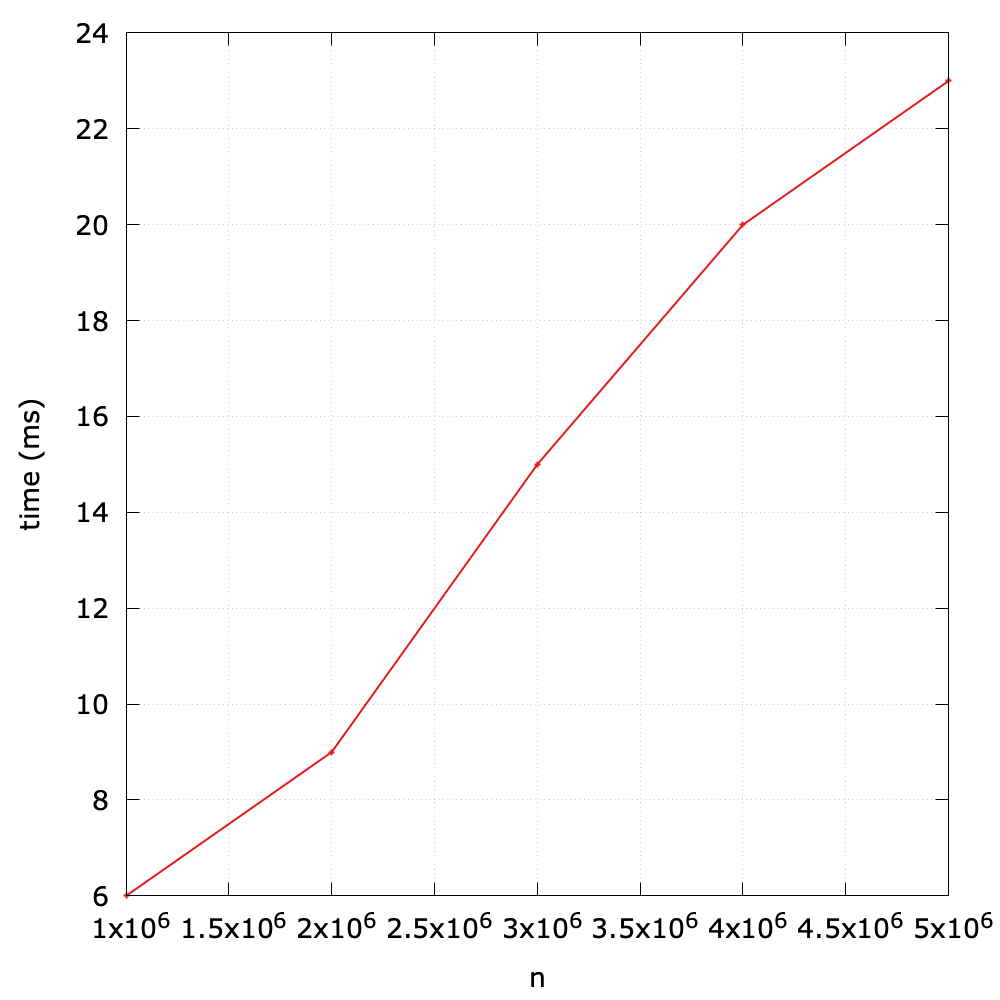

As we can see, most operations, except for dequeue() are with constant time complexity1, so it is very efficient. However, the dequeue() is inefficient as it grows linearly with respect to the size of the queue (i.e., N).

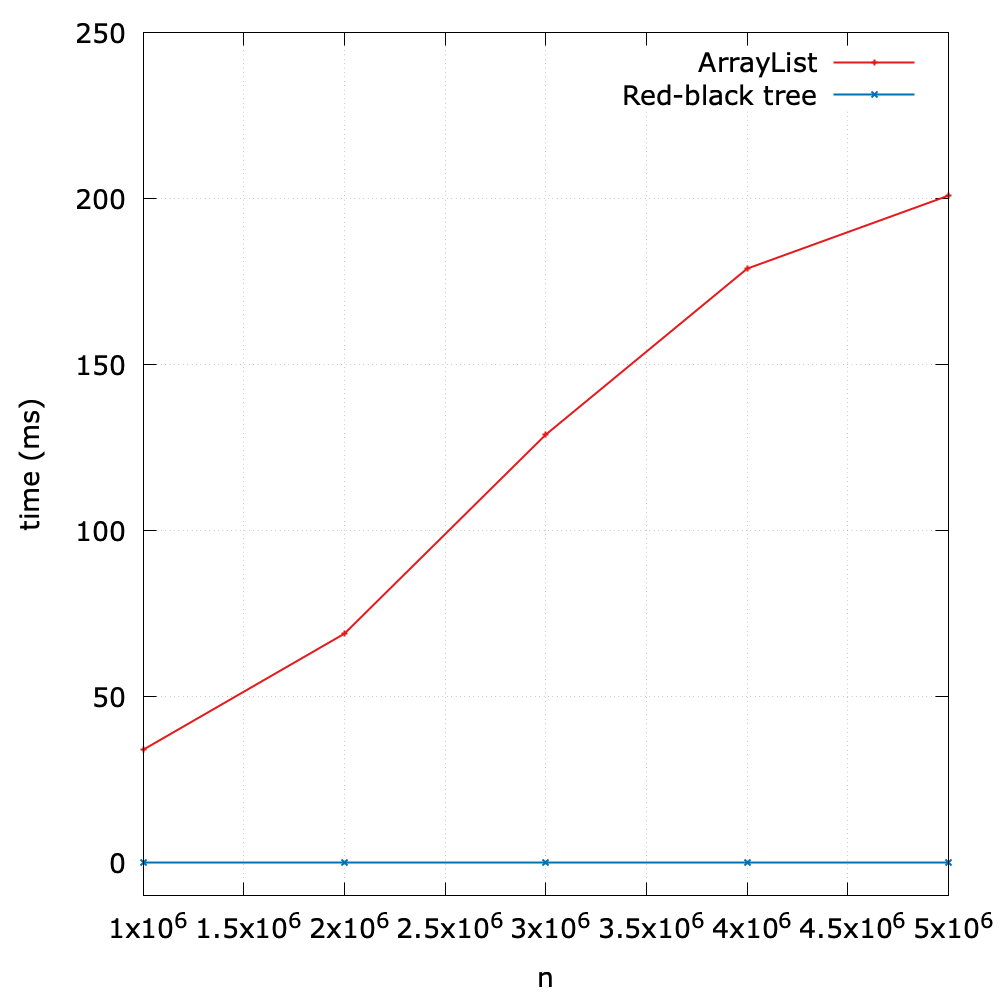

To validate this theoretical analysis, I design a simple benchmark to test the performance (see queue_vary_size() method in benchmark.py). The main idea is summarized in the following:

- Initialize queues with different size (1000000, 2000000, 3000000, 4000000, 5000000).

- Perform the

dequeue()operation, and time it2.

As we expect, the running time is roughly linear with respect to the size of the queue.

Now let's explore the reason of the inefficiency. Since the underlying data structure is a list, when we call enqueue() on it, the first item will be removed and the next N-1 items will be shifted to the left. Clearly, this moving action will result in \(O(N)\). Of course this design still achieves an acceptable performance in applications in which queues have relatively modest size, but when it comes to a large amount of data, it should be improved.

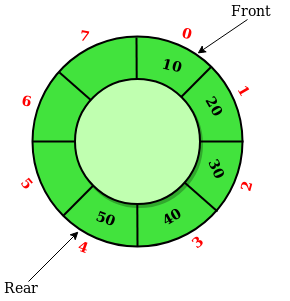

Using an array circularly

This subsection is adapted from Circular Queue. Such structure is also known as ring buffer in many applications.

As illustrated in the figure above, we shall maintain a front pointer (i.e., index) to point to the first item and use rear pointer (i.e., index) to point to next available position3. Assume this circular array is large enough to hold all items:

enqueue(): update rear to next one in the clock-wise.dequeue(): update front to next one in the clock-wise.

Now let's consider a running example to understand the principle of circular arrays. Suppose the size of the array is 8, and the queue q is empty at start, and the front is 0:

| Operation | Front | Rear | Size |

|---|---|---|---|

| (Start) | 0 | 0 | 0 |

| q.enqueue(10) | 0 | 1 | 1 |

| q.enqueue(20) | 0 | 2 | 2 |

| q.enqueue(30) | 0 | 3 | 3 |

| q.dequeue() | 1 | 3 | 2 |

| q.enqueue(40) | 1 | 4 | 3 |

| q.enqueue(50) | 1 | 5 | 4 |

| q.dequeue() | 2 | 5 | 3 |

Obviously, the dequeue() operation only results in a pointer shift, so the time complexity is \(O(1)\). What a clever design! In addition, we can find that the specific pointer (i.e., index) does not really matter, so we can maintain front and size instead, and the rear can be computed based on them.

class CircularQueue:

"""A queue based on a circular array."""

DEFAULT_CAPACITY = 10

def __init__(self):

self._data = [None] * CircularQueue.DEFAULT_CAPACITY

self._size = 0

self._front = 0

Then, what if we call enqueue() when front is 6 and size is 1? In this case, the implicit rear will become 0 because (7 + 1) mod 8 = 0. The mod operation makes the pointer in arrays be circular.

It is only circular conceptually, and it is still a linear array. The implementation shares many ideas with Stack based on array in Stacks in Java.

A final problem to be addressed is to resize. As we have learned in Stacks in Java, we shall resize the underlying array if the size reaches to its capacity.

def enqueue(self, item):

# expand

if self.size() == len(self._data):

self._resize(2 * len(self._data))

avail = (self._front + self.size()) % len(self._data)

self._data[avail] = item

self._size += 1

We always double the array's size when the size of a queue reaches to its capacity. avail, computed through a mod operation, means, in fact, rear, the available position in the array.

def dequeue(self):

if self.is_empty():

raise NoElement('Dequeue from empty queue!')

# shrink (optional)

if self.size() <= len(self._data) // 4:

self._resize(len(self._data) // 2)

answer = self._data[self._front]

self._data[self._front] = None

self._front = (self._front + 1) % len(self._data)

self._size -= 1

return answer

The shrink operation is optional to save the memory space. Again, we update front through a mod operation. When the pointer shifts, it is a good practice to let it point to None for the sake of garbage collection.

The resize operation is a private method, and it is worthwhile to investigate how it works:

def _resize(self, capacity):

assert capacity > self.size()

old = self._data

self._data = [None] * capacity

walk = self._front

for i in range(self._size):

self._data[i] = old[walk]

walk = (1 + walk) % len(old)

self._front = 0

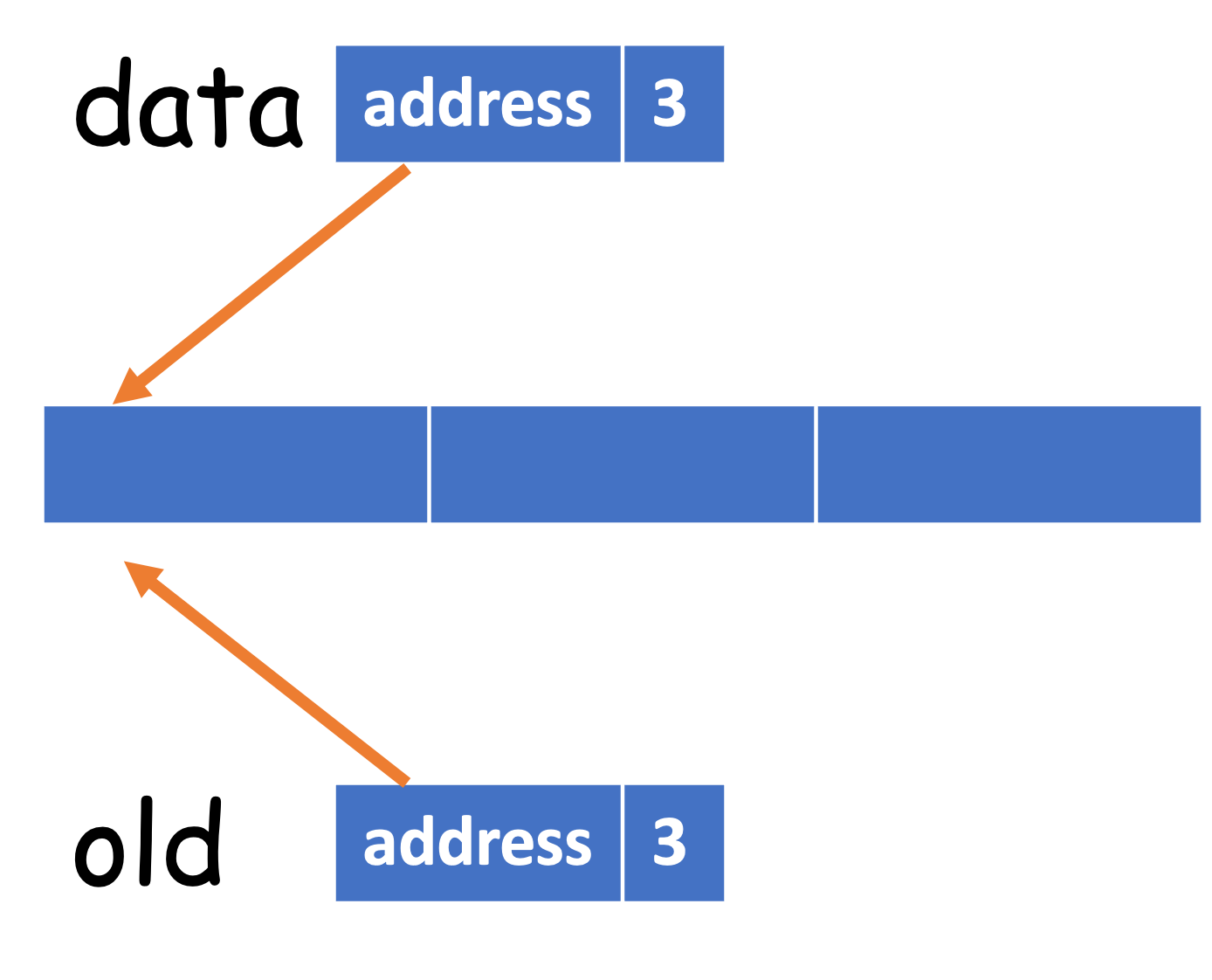

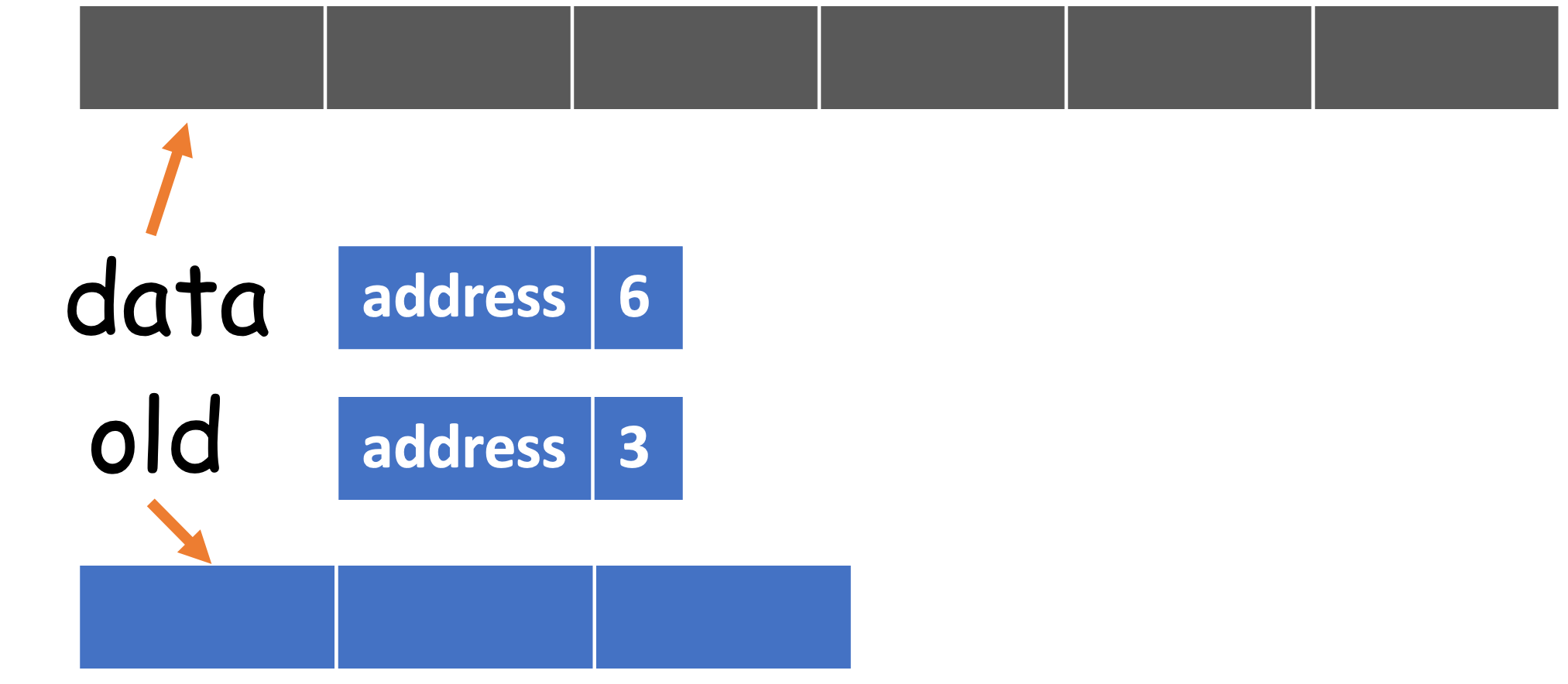

To understand it, we shall have a basic knowledge about the object model in Python:

When we create a list data, we essentially create a list and assigns the reference to data. So data is not the list itself, and it roughly has two parts: the address pointing to the list, and the size of the list.

data = [1, 2, 3]

old = data

If we add a new item to old, then data will also be updated:

old.append(4)

# [1, 2, 3, 4]

print(data)

Therefore, old and data refer to the same object, but with different names4. Now let's revisit the implementation of _resize(). After self._data = [None] * capacity is called, it becomes

The next step is to copy from old[front..rear] to data[0.._size]. Of course, the range from front to near may be circular, so it should be computed through the mod operation.

The complete code can be found at circular_queue.py. Please try to implement an iterator for circular queues on your own before checking the code in GitHub.

A few notes on queues

When you need queues, please consider collections.deque, instead of implementing a new one from the scratch.

1 Like stacks, the time complexity of enqueue() is amortized.

2 To avoid the randomness brought by a single operation, we repeat it 20 times. Since 20 is much smaller than the size of queue, the measured time still makes sense.

3 Some designs assume that rear points to the last item in a queue. In this case, rear is -1 when the queue is empty.

4 https://towardsdatascience.com/python-memory-and-objects-e7bec4a2845

Queues in Java

An implementation of our Queue API based on the ArrayList data structure is also straightforward, and this is left as an exercise.

Using an array circularly

The queues based on the ArrayList also suffers from the bad performance caused by enqueue(). Therefore, we will use an array circularly as the underlying data structure.

public class CircularArrayQueue<Item> implements Iterable<Item> {

private static final int DEFAULT_CAPACITY = 10;

private Item[] a;

private int n; // number of elements in queue

private int front;

...

}

Once you have understood how the circular array works, you can translate the Python implementation to its Java counterpart with ease. For example,

public void enqueue(Item item) {

if (n == a.length) {

resize(2 * a.length);

}

int avail = (front + n) % a.length;

a[avail] = item;

n += 1;

}

public Item dequeue() {

if (isEmpty()) {

throw new NoSuchElementException("Dequeue from empty queue!");

}

if (n <= a.length / 4) {

resize(a.length / 2);

}

Item answer = a[front];

a[front] = null;

n -= 1;

front = (front + 1) % a.length;

return answer;

}

Except for the syntax itself, they are the same exactly! The complete code can be found at CircularQueue.java.

An experimental evaluation

According to the theoretical analysis, the time complexity of dequeue() of circular queues is \(O(1)\). To what follows, I design a small benchmark to evaluate its performance (Benchmark.java).

We initialize the size in the range of [10000000, 20000000, 30000000, 40000000, 50000000], and we find that no matter how the size changes, the measured is always 0! It is very fast with a constant time complexity.

A few notes on Queue interface

When you need queues, please have a look at the implementing classes of Queue interface, instead of implementing a new one from the scratch.

Java offers the Queue interface, which has the following methods:

add(): Inserts the specified element into this queue if it is possible to do so immediately without violating capacity restrictions, returning true upon success and throwing an IllegalStateException if no space is currently available.remove(): Retrieves and removes the head of this queue.

These two methods would throw exceptions. In contrast, it also provides a pair of APIs to return special value:

offer(): Inserts the specified element into this queue if it is possible to do so immediately without violating capacity restrictions.poll(): Retrieves and removes the head of this queue, or returns null if this queue is empty.

To use a queue, we often need to choose one of its implementing classes, such as ArrayDeque, LinkedList. For example,

Queue<String> q = new LinkedList<>();

q.offer("data");

q.offer("structure");

q.offer("is");

q.offer("fun");

assert Objects.equals(q.poll(), "data");

assert Objects.equals(q.poll(), "structure");

Although we have not learned LinkedList in detail yet, we are still able to use its public APIs1 because the interface makes such promise.

1 LinkedList is very complex by offering plenty of APIs.

Deques

In this section, we consider a queue-like data structure that supports insertion and deletion at both the front and the back of the queue. Such a structure is called a double-ended queue, or deque, which is usually pronounced deck to avoid confusion.

The deque collection is more general than both the stack and queue. The extra generality makes it appealing in many applications. Let's consider the analogy that people are calling 10086, and it is often described as a queue. But in reality, people who are at the end of the queue may become impatient and leave the queue (delete_last()). Besides, someone who is a VIP can jump the queue (add_first()) without long time waiting.

A deque supports the following methods:

add_first(): Add a new element to the front of a dequeadd_last(): Add a new element to the end of a dequedelete_first(): Remove and return the first element from a dequedelete_last(): Remove and return the last element from a deque

Additionally, it will include the following accessors:

first(): Return (but not to remove) the first element of a dequelast(): Return (but not to remove) the last element of a dequeis_empty(): ReturnTrueif a deque does not contain any elementsize(): Return the number of elements in a deque

Deques in Python

Luckily, collections.deque has been designed in Python, which have fast appends and pops from both ends.

from collections import deque

q = deque(['structures', 'is'])

q.append('fun') # add_last

q.append('!') # add_last

q.appendleft('data') # add_first

q.pop() # delete_last

print(q)

q.popleft() # delete_first

print(q)

As we can see, append() is to add to the end, while appendleft() is to add to the front; pop() is to remove the last, while popleft() is to remove the first.

Deques based on circular arrays

In practice, collections.deque is always your top choice. But as for learning, it is meaningful and interesting to implement a deque on our own. The complete code can be found at array_deque.py.

class ArrayDeque:

"""A double-ended queue (deque) based on circular queues."""

DEFAULT_CAPACITY = 10

def __init__(self):

self._data = [None] * ArrayDeque.DEFAULT_CAPACITY

self._size = 0

self._front = 0

Clearly, add_last() is exactly the same with enqueue(), and remove_first() is exactly the same with dequeue().

As for as_first() and delete_last(), we rely on mod to circularly decrement the index1:

def add_first(self, item):

if self.size() == len(self._data):

self._resize(2 * len(self._data))

self._front = (self._front - 1) % len(self._data)

self._data[self._front] = item

self._size += 1

def delete_last(self):

if self.is_empty():

raise NoElement('Remove from empty queue!')

if self.size() == len(self._data) // 4:

self._resize(len(self._data) // 2)

back = (self._front + self._size - 1) % len(self._data)

answer = self._data[back]

self._data[back] = None

self._size -= 1

return answer

Application: number of recent calls

This application is from leetcode, and readers shall check the question description by themselves.

As for this problem, one feasible algorithm is to use deque:

from collections import deque

class RecentCounter:

def __init__(self):

self.data = deque()

def ping(self, t: int) -> int:

while len(self.data) > 0 and (t - 3000) > self.data[0]:

self.data.popleft()

self.data.append(t)

return len(self.data)

1 -1 mod n = n - 1.

Deques in Java

JDK is shipped with the Deque interface as well as many useful implementing classes, including ArrayDeque, LinkedList. In this section, we use ArrayDeque as the running example, which is a resizable-array implementation of the Deque interface. Deque defines methods to access the elements at both ends of the deque.

Deque<String> deque = new ArrayDeque<>();

deque.offerLast("structures");

deque.offerLast("is");

deque.offerFirst("data");

deque.offerLast("fun");

assert Objects.equals(deque.pollFirst(), "data");

assert Objects.equals(deque.peekLast(), "fun");

The implementation based on circular arrays by yourself is left as an exercise.

If you need deques in your project, please choose one of the implementing classes from JDK, instead of designing a new one from the scratch.

Application: number of recent calls

This application is from leetcode, and readers shall check the question description by themselves.

As for this problem, one feasible algorithm is to use ArrayDeque:

import java.util.ArrayDeque;

import java.util.Deque;

public class RecentCounter {

private final Deque<Integer> data;

public RecentCounter() {

data = new ArrayDeque<>();

}

public int ping(int t) {

while (!data.isEmpty() && (t - 3000) > data.peekFirst()) {

data.pollFirst();

}

data.offerLast(t);

return data.size();

}

}

Exercise

- As for stacks, another operation

top()(also known aspeek()) is often used. It works likepop(), but it will keep the item in the stack. Please implementpeek()method in Java or Python.

- In stack.py, we would often expect that

len()can be used directly like other collections in Python:

s = Stack()

print(len(s))

Please make it possible for Stack.

Hint: you can refer to Python __len__() Magic Method.

- In evaluate.py,

ifstatement is used to determine the evaluation logic:

if op == '+':

v = vals.pop() + v

elif op == '-':

v = vals.pop() - v

elif op == '*':

v = vals.pop() * v

elif op == '/':

v = vals.pop() / v

Please try to refactor via operator module to simplify the code.

Hint: you can refer to Turn string into operator.

- The current implementation for Dijkstra's two-stack algorithm for expression evaluation (evaluate.py, Evaluate.java) only support

+,-,*, and/. Please add thesqrtoperator that takes just one argument.

v = evaluate.compute('( ( 1 + sqrt ( 5.0 ) ) / 2.0 )')

print(v)

- Please implement matching parentheses and arithmetic expression evaluation based on ArrayListStack.java using Java respectively.

- The theoretical analysis shows that the time complexity of

dequeue()of circular queues is \(O(1)\). Please design experiments and visualize your results to validate this theoretical cost based on circular_queue.py.

- The built-in

id()function returns the memory address, and You can also check if two variables are pointing to the same object usingisoperator. Try to use those two methods to validateaandbpoint to the same object:

a = [1, 2, 3]

b = a

- Please implement a queue based on

ArrayListin Java.

- As for queues, another operation

first()(also known aspeek()) is often used. It works likedequeue(), but it will keep the item in the queue. Please implementpeek()method in Java or Python based on circular queues.

- Please implement a deque based on circular arrays in Java.

- Postfix notation is an unambiguous way of writing an arithmetic expression without parentheses. It is defined so that if "(exp1)op(exp2)" is a normal fully parenthesized expression whose operation is

op, the postfix version of this is "exp1 exp2 op".

So, for example, the postfix version of "((5 + 2) * (8 − 3))/4" is "5 2 + 8 3 − * 4 /". Describe an algorithm to evaluate an expression in postfix notation. For simplicity, the numbers and operators are separated with a space.

Lists

Lists are fundamental data structures, and we have used its array based version (e.g., ArrayList in Java and list in Python) in the daily programming tasks. What's more, the previous stacks, queues and deques are also in fact based on a list.

In this chapter, we will investigate lists in a comprehensive way. Particularly, a challenging structure called linked list will be introduced.

The List ADT

In this section, we will formally introduce an important concept:

Abstract Data type (ADT) is a type (or class) for objects whose behavior is defined by a set of values and a set of operations.

So, the previous introduced stacks, queues, and deques all are ADTs. You do not need to know how a data type is implemented in order to be able to use it. Note that the definition of ADT only mentions what operations are to be performed but not how these operations will be implemented. For example, a queue based on ArrayList and a queue based on circular arrays are two different implementations of the queue ADT.

The list ADT represent an ordered collection containing linear sequence of elements, but with more general support for adding or removing elements at arbitrary positions. Since it is linear, locations within a list ADT are easily described with an integer index.

With that said, Java defines a general interface, java.util.List, that includes the following useful methods1 (and more):

add(e): Appends the specified element to the end of this list.add(i, e): Inserts the specified element at the specified position in this list.contains(o): Returns true if this list contains the specified element.get(i): Returns the element at the specified position in this list.indexOf(o): Returns the index of the first occurrence of the specified element in this list, or -1 if this list does not contain the element.isEmpty(): Returns true if this list contains no elements.lastIndexOf(o): Returns the index of the last occurrence of the specified element in this list, or -1 if this list does not contain the element.remove(i): Removes the element at the specified position in this list.remove(o): Removes the first occurrence of the specified element from this list, if it is present.set(i, o): Replaces the element at the specified position in this list with the specified element.size(): Returns the number of elements in this list.

ArrayList is a well known implementing class of List interface, and you can play with those methods on it.

Revisit Python's list

Now let's revisit the methods provided by Python's list, and then compare them with their Java's counterparts.

Python's

listis very closely related to theArrayListclass in Java.

| Java's List | Python's list |

|---|---|

| add(e) | append(e) |

| add(i, e) | insert(i, e) |

| contains(o) | in |

| get(i) | [i] |

| indexOf(o) | index(o) |

| isEmpty() | built-in len() |

| lastIndexOf(o) | * |

| remove(i) | pop(i) |

| remove(o) | remove(o) |

| set(i, o) | [i] |

| size() | built-in len() |

The implementation marked with * is left as an exercise.

1 Most of them are index-based.

ArrayList

One popular implementation of the list ADT is array based, which we have used it before. So, what is an array exactly?

An array is a linear data structure that collects elements of the same data type and stores them in contiguous and adjacent memory locations.

There are two key points about elements in an array:

- The same type.

- Contiguous in memory.

By this definition, Python's list is not an array1 because it can hold elements with mixing types. Those two features result in pros and cons at the same time.

- It is very efficient for index-based accessor methods.

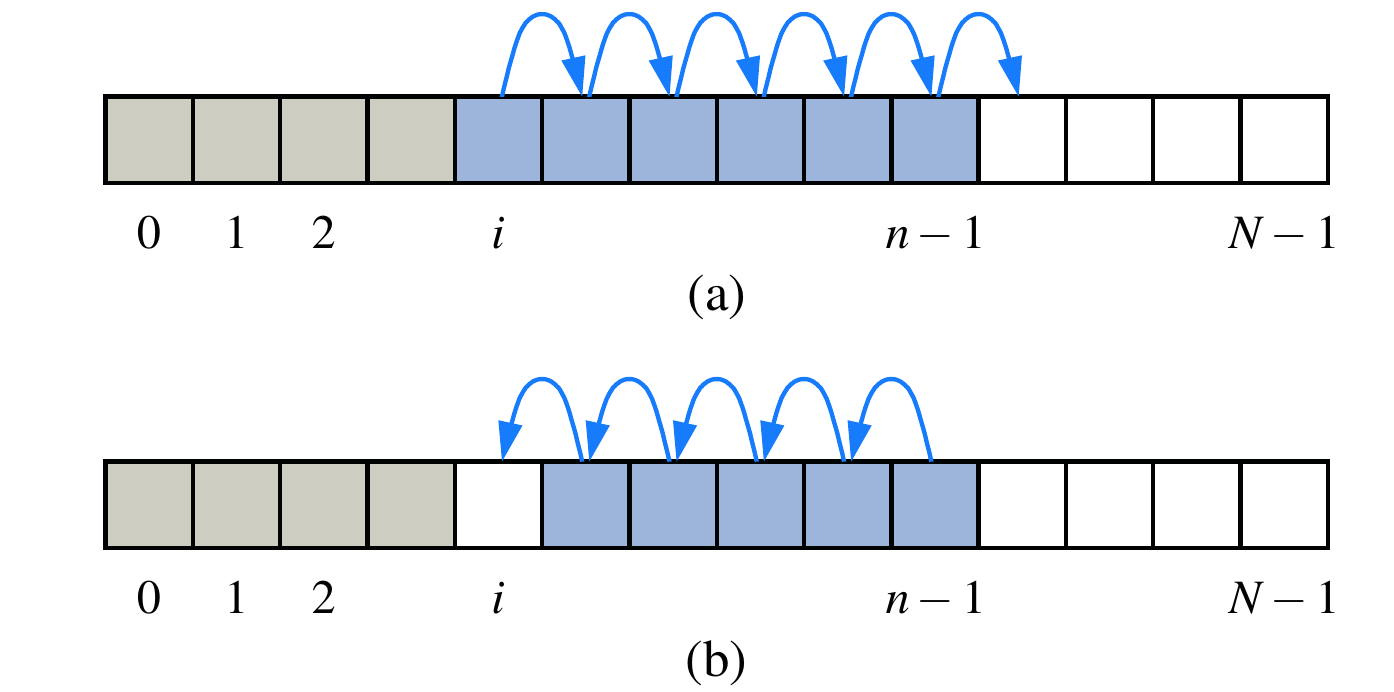

- It can be time-consuming when shifting or copying is required (e.g.,

dequeue()in array-based queue, andresize()).

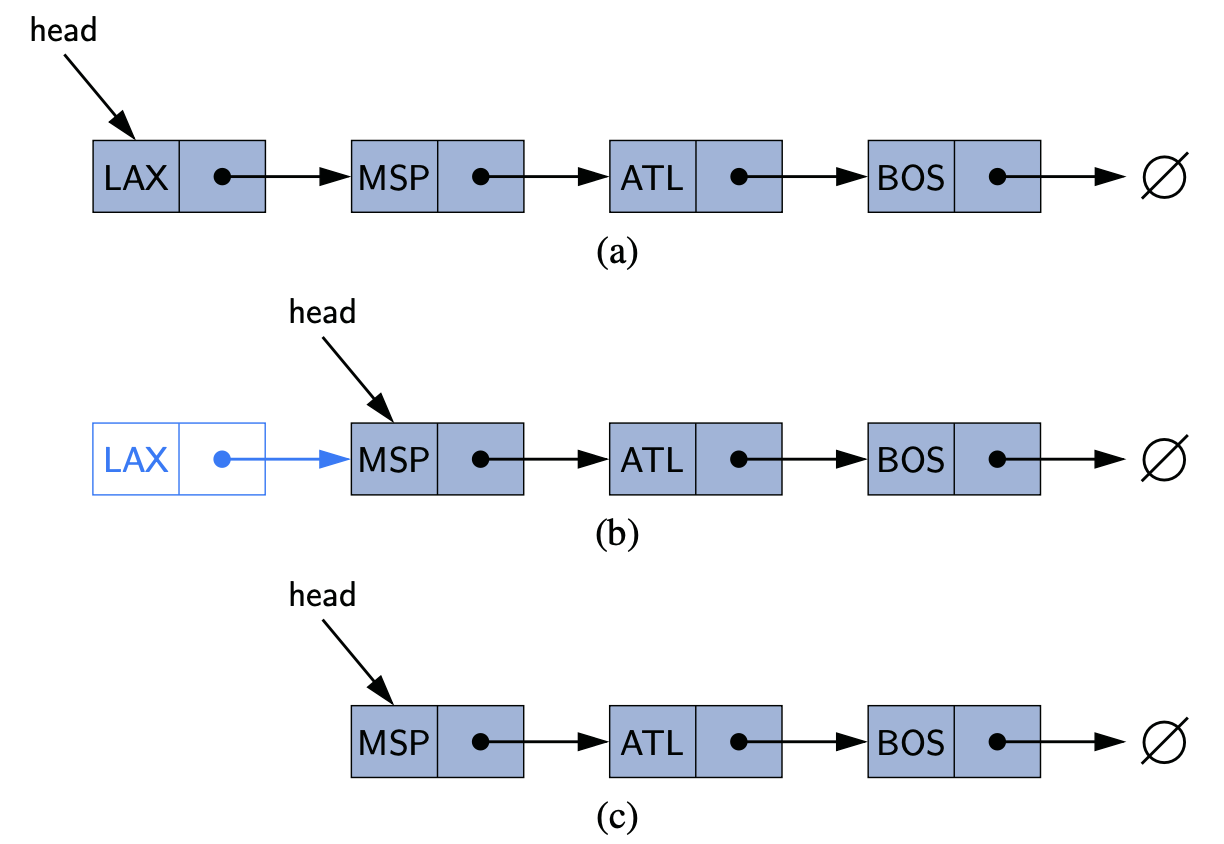

The figure2 above illustrates (a) shifting up for an insertion at index i; (b) shifting down for a removal at index i.

Once you have grasped the usages of an array, you are able to implement your own ArrayList in Java, and this is left as an exercise.

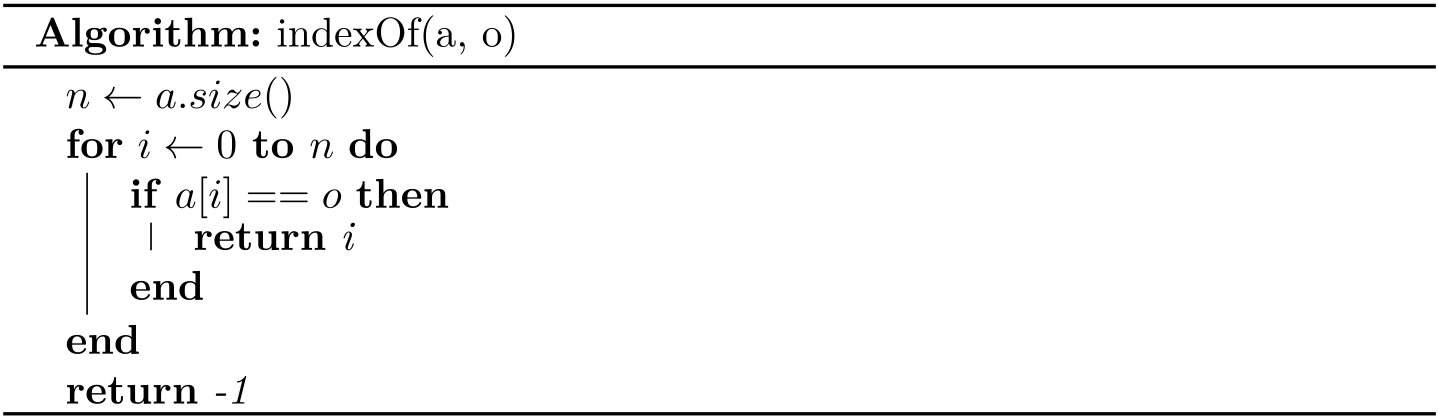

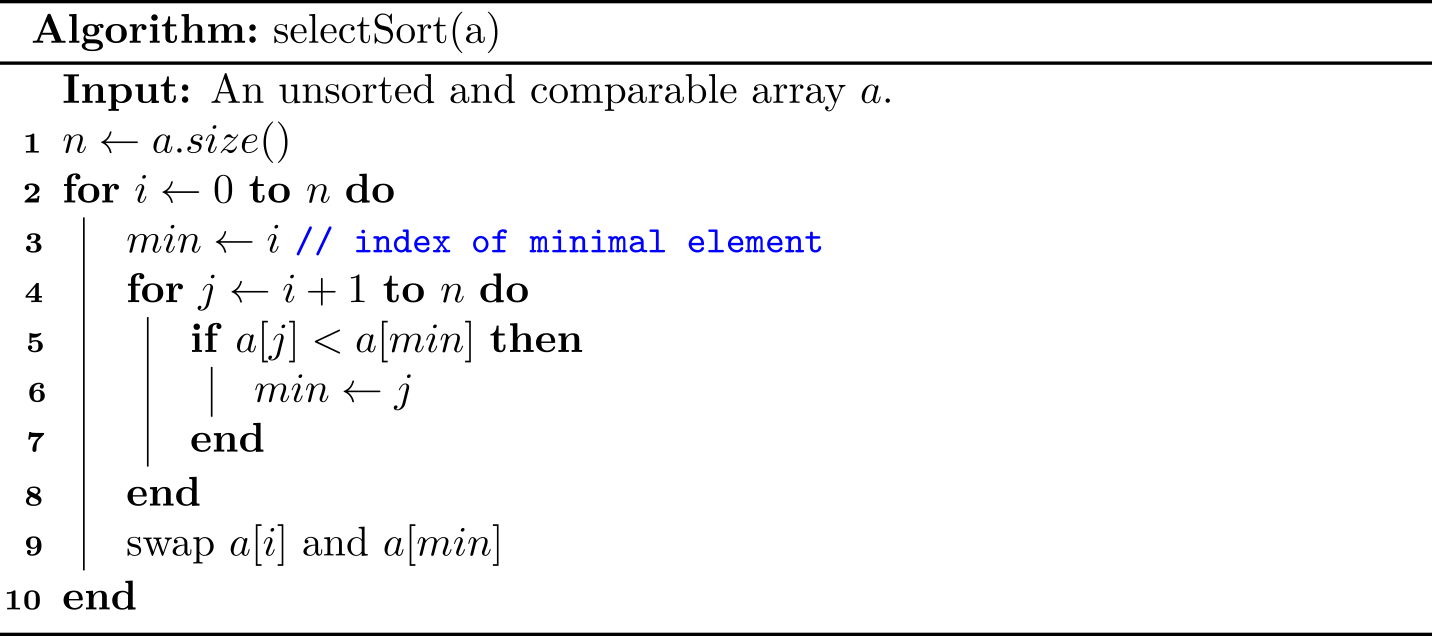

Pseudo code

Previously, we use either plain English or a specific programming language to describe an algorithm, but it lacks of generality to some extent. This is because data structures and algorithms can be virtually written in all programming languages3. Therefore, we are seeking for a more general approach. The plain English is a candidate method, but it is overly verbose. More importantly, it is not accurate.

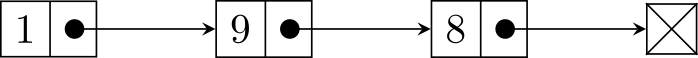

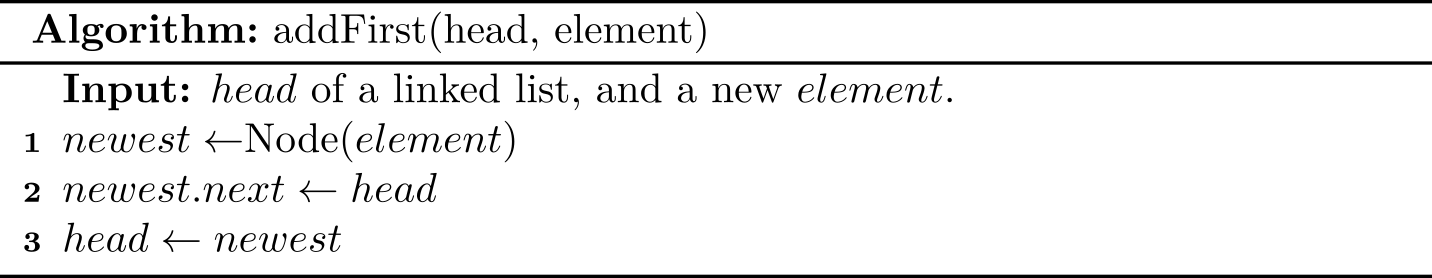

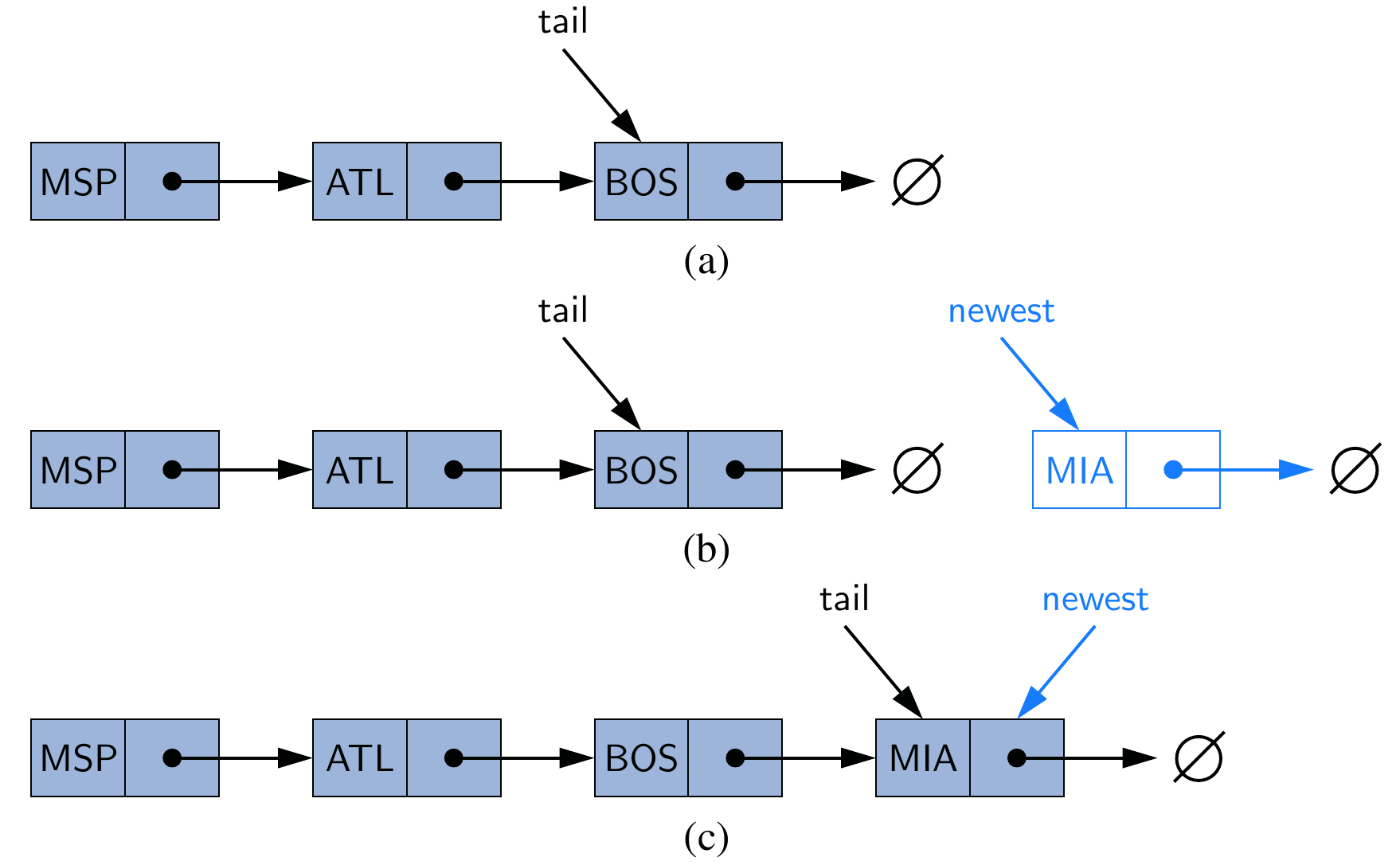

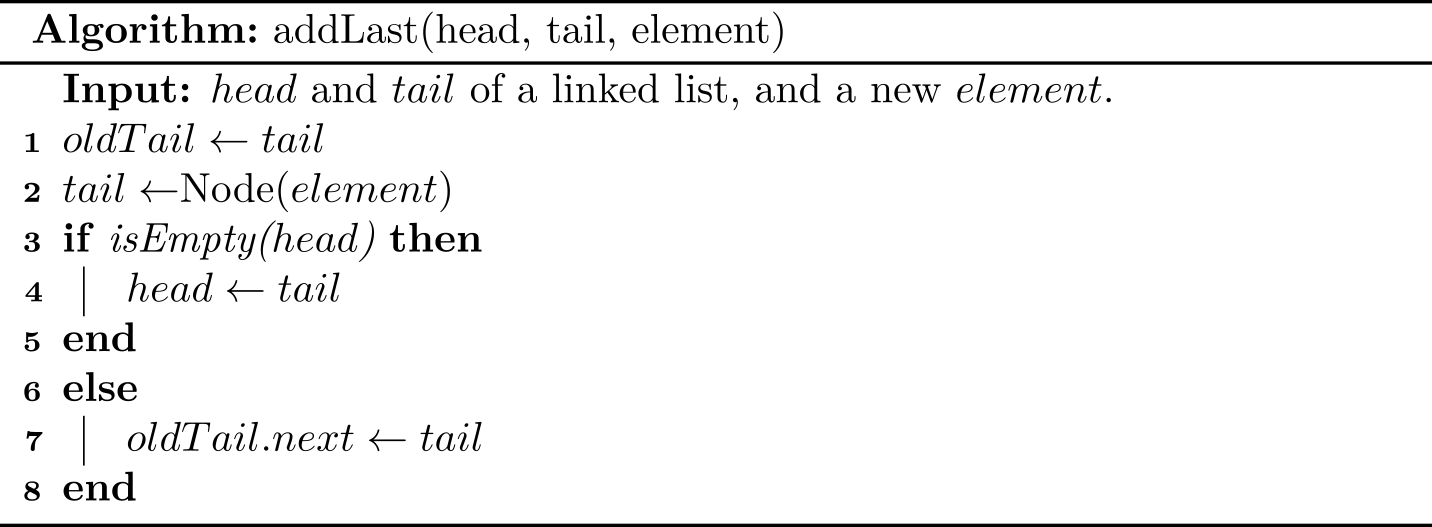

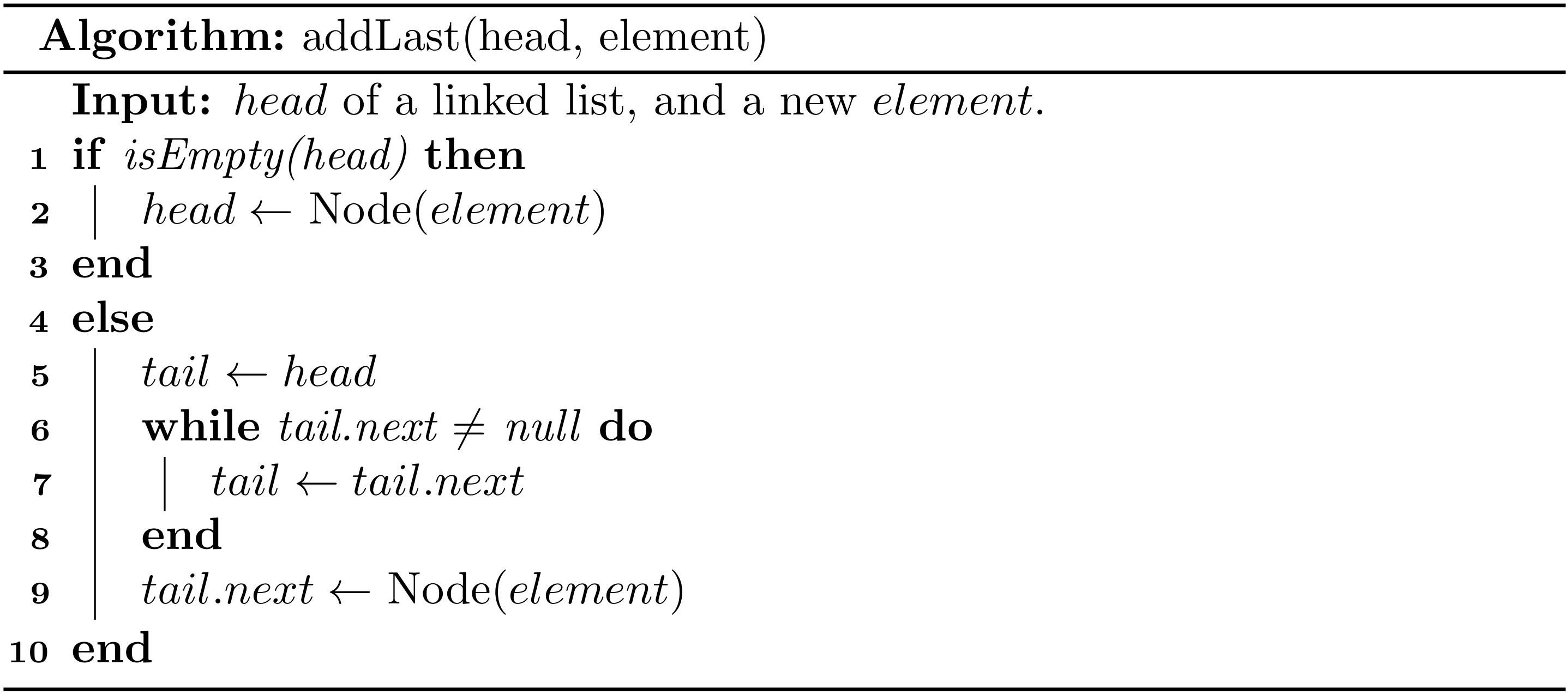

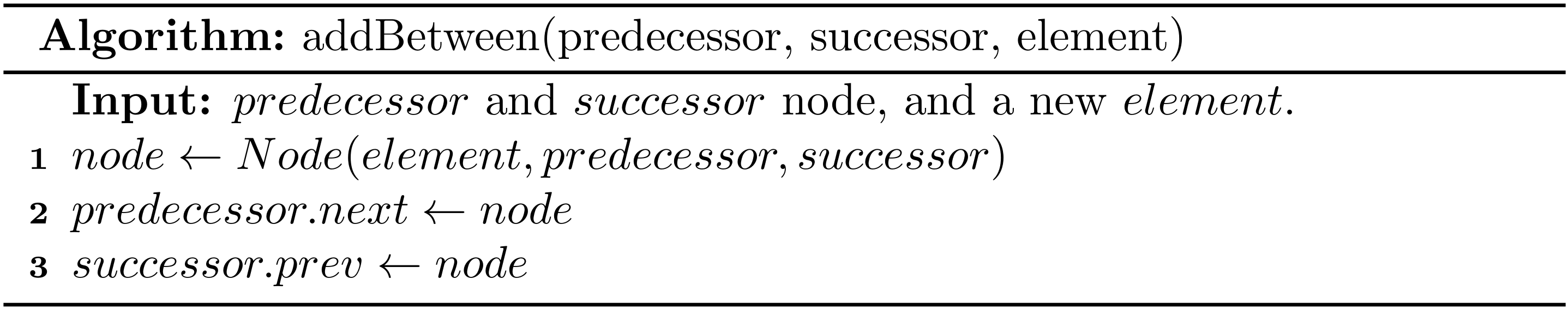

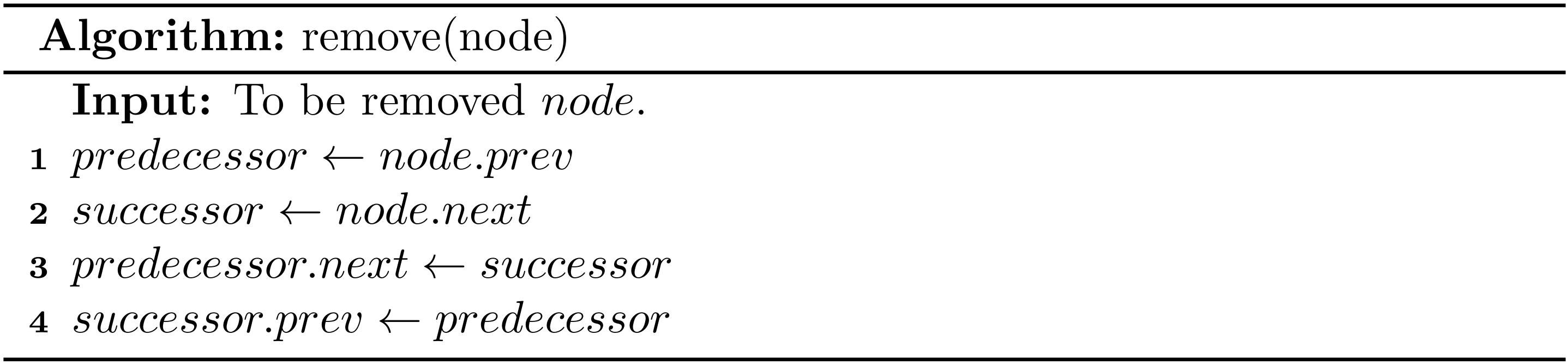

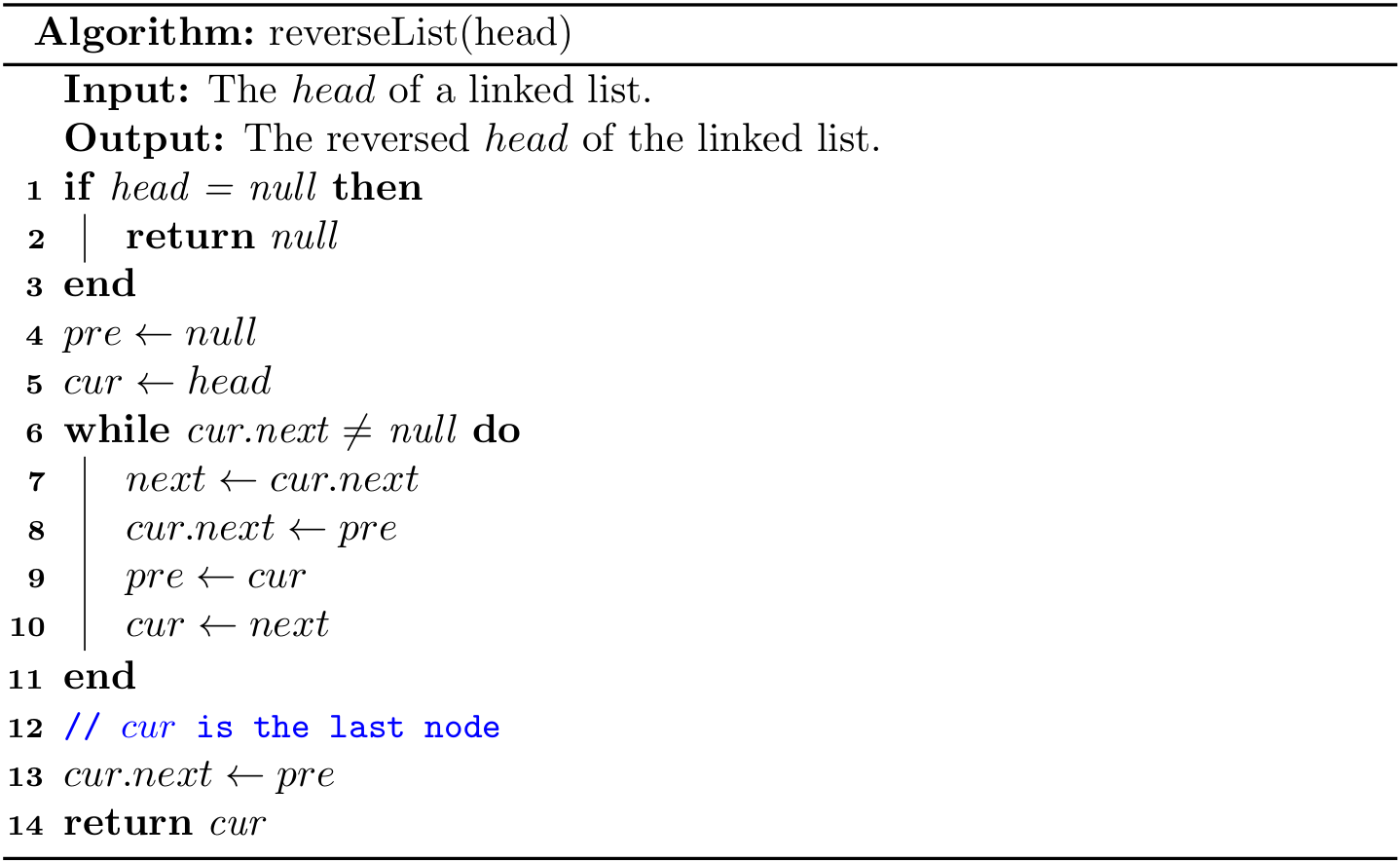

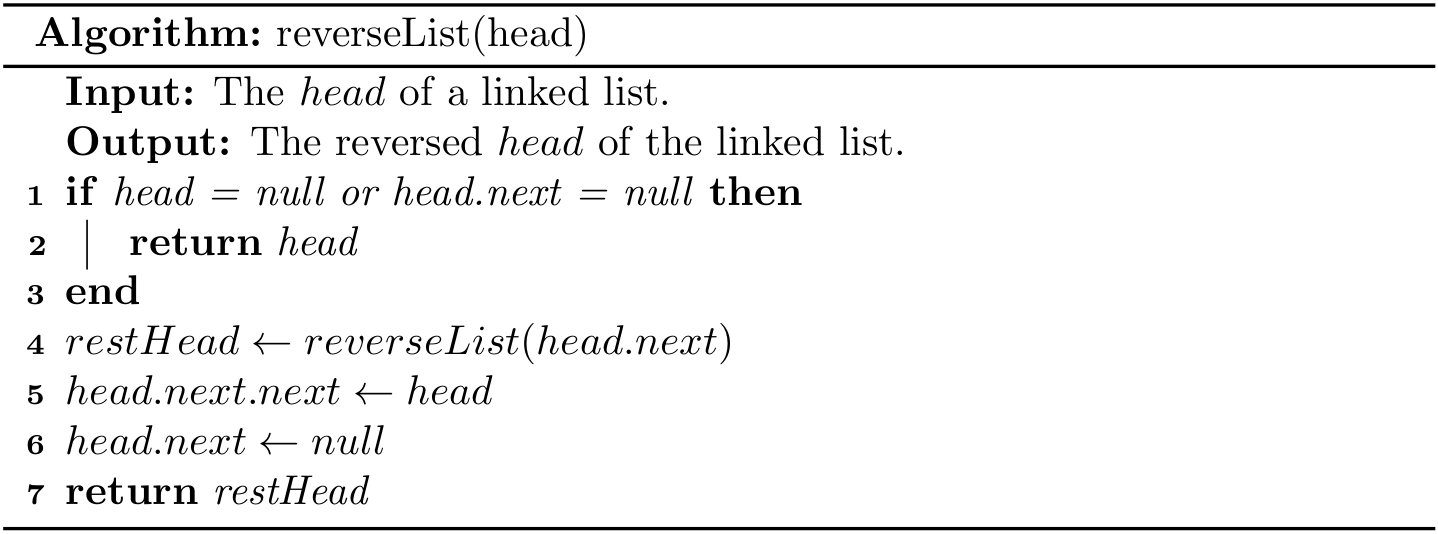

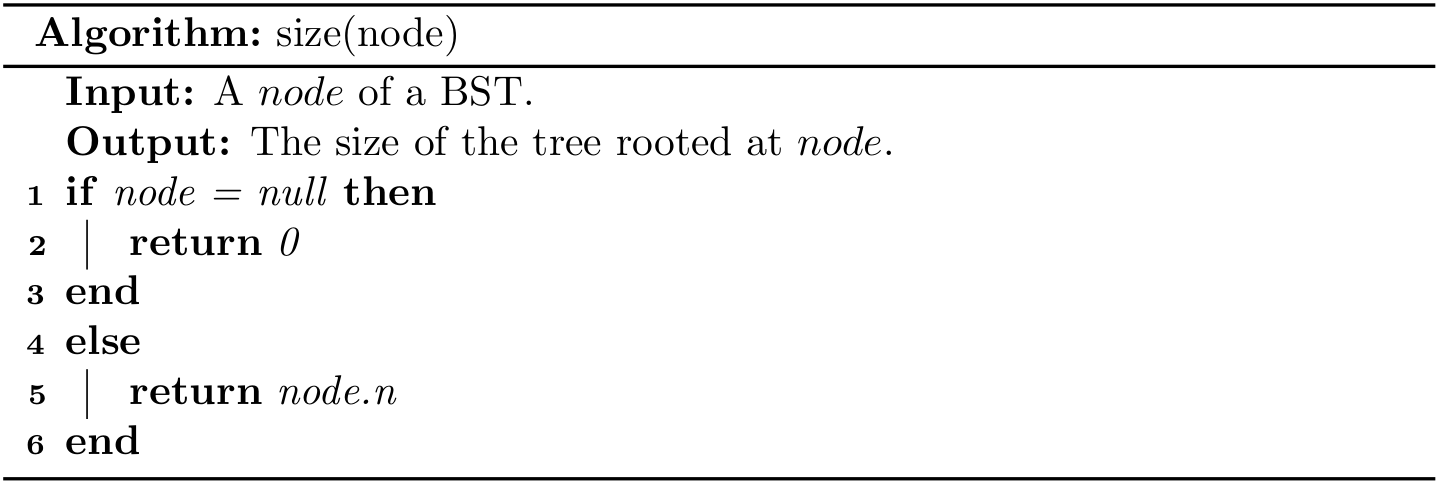

In this subsection, we are going to study the pseudo-code programming. The term pseudo-code refers to an informal, English-like notation for describing how an algorithm, a method, a class, or a program will work.